We’re living in an era where AI has become the driving force behind digital transformation. As Google CEO Sundar Pichai put it,

Yet, this revolution is as complex as it is promising.

According to McKinsey’s State of AI 2025 report, 78% of organizations now use AI in at least one business function, up from 55% just two years ago. Despite this rapid adoption, fewer than one in four companies say they’ve captured significant financial value from their AI initiatives. This underscores that success lies not in adopting AI tools, but in integrating them strategically.

AI holds immense potential to elevate efficiency, creativity, and decision-making, but its true power emerges only when it’s embedded into a well-designed digital transformation strategy.

In this step-by-step guide, we’ll walk through how to move from experimentation to enterprise-wide transformation and help your organization implement AI with clarity, precision, and measurable impact.

Why Do So Many AI Initiatives Underdeliver?

AI promises transformational value, yet most organizations never get close to realizing it. An analysis reports that only 5% of more than 1,250 companies are seeing measurable business value from their AI investments.

The pattern behind AI failure becomes clearer when you look beyond the surface and examine why those attempts collapse. Almost every failure traces back to one root issue: AI is bolted onto existing processes instead of reshaping them. The symptoms simply show up in different forms:

1. Strategy Without Scale

Companies often launch impressive pilots, a chatbot here, an automation workflow there, but none of it compounds into enterprise-wide change. The reason? Pilots aren’t tied to a broader operating model or value chain redesign. Without a “how this scales” blueprint, AI remains a local experiment, not a business lever.

2. Weak Data Foundations

AI needs structured, governed, and accessible data to function. Yet Database Trends and Applications reports that 62% of organizations cite poor data governance as their most significant barrier, and only 12% say their data is even ready for AI deployment.

This isn’t a tech problem; it's an operating model issue. Without disciplined data ownership, cross-functional standards, and a single source of truth, AI models simply inherit the chaos.

3. Talent and Culture Gaps

Many firms invest in AI tools but never redesign roles or decision-making norms to use them. When employees aren’t trained or incentivized to apply AI insights, models sit idle. Transformation fails not because algorithms are wrong, but because people continue to work in pre-AI ways.

4. Siloed Ownership

When AI is treated as an IT initiative instead of a business initiative, it gets isolated from strategy, P&L accountability, and frontline feedback. This kills adoption. Successful AI transformation requires joint ownership between tech teams and business leaders who understand the daily realities of customers, operations, and revenue flows.

5. Lack of Governance and Trust

Even the most accurate models won’t be used if people don’t trust them. Without clear model explainability, ethical safeguards, and transparent governance, employees revert to manual decision-making. The result is low utilization, which quietly destroys ROI.

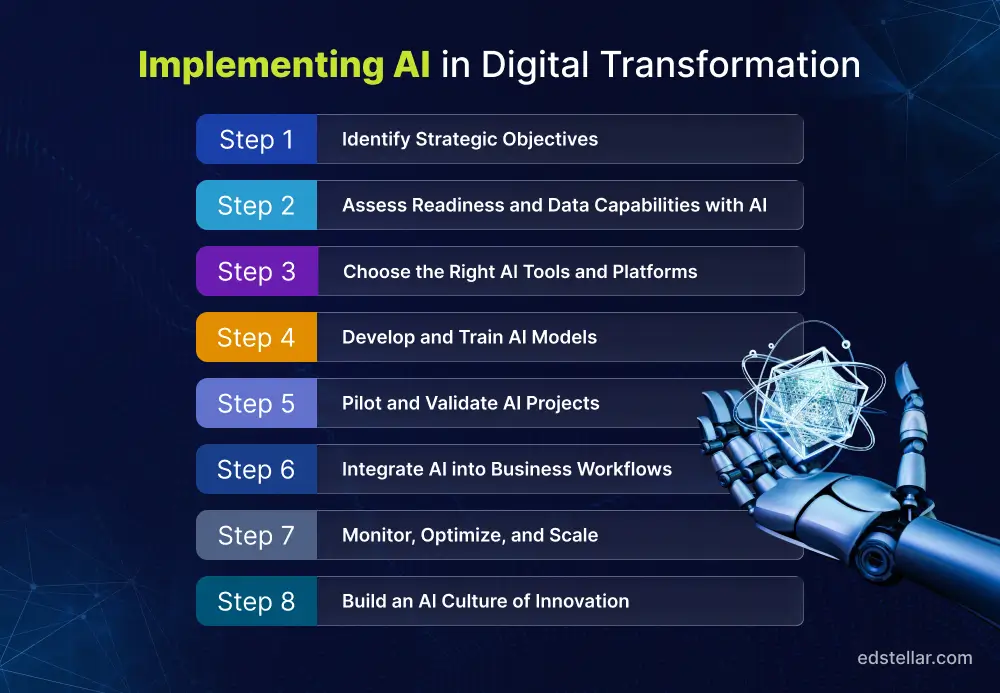

Implementing AI in Digital Transformation

Now that we’ve seen why AI initiatives often stall, let’s shift to what success looks like.

Leading companies approach AI implementation as an ongoing evolution where people, data, and technology move together toward measurable impact.

Here’s a clear, actionable framework to help you embed AI at the core of your digital transformation, turning ambition into sustained business results.

Step 1: Identify Strategic Objectives

AI transformation begins with clarity. Before exploring models or tools, define where AI can change business performance, not where it simply “fits.”

Pinpoint High-Impact Problems

Look for issues that already show up in your KPIs, P&L, or customer metrics:

- Chronic forecasting or planning breakdowns

- High churn or slow service at critical touchpoints

- Manual workflows that limit scale

- Fragmented data slowing down decisions

AI should address measurable operational or financial pain, not generic “innovation goals.”

Translate Priorities into AI Use Cases

Once you know the business problems, the next step is turning them into specific, actionable AI use cases.

A use case is only real if you can define the metric, the AI method, and the data needed.

Map Each Problem to the Right AI Capability:

- Forecasting problems → Prediction models - Use this for demand planning, inventory optimization, or workforce capacity. It works when you have enough historical data and clear seasonality patterns.

- Slow or manual workflows → Automation models - Best for claims processing, ticket routing, or document extraction. The process must be repeatable and supported by structured or semi-structured data.

- Customer drop-offs → Personalization models - Useful for product recommendations, targeted offers, or journey sequencing. You need strong behavioral and transaction data to make this effective.

- High risk or failure rates → Risk and anomaly detection models - Works for churn prediction, fraud detection, or equipment failure alerts. You need historical event data and clearly defined risk thresholds.

A Simple Rule for Validation:

A use case should only move forward if you can answer three questions clearly:

- What business metric will this improve?

- Which AI technique solves it?

- Do we have the data to build it?

If any answer is unclear, the use case is not ready.
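The three-question rule above can be sketched as a simple readiness check. This is a minimal illustration, not a prescribed tool: the `UseCase` class, its field names, and the sample use cases are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UseCase:
    """A candidate AI use case (all field names are illustrative)."""
    name: str
    business_metric: Optional[str] = None  # What business metric will this improve?
    ai_technique: Optional[str] = None     # Which AI technique solves it?
    data_available: Optional[bool] = None  # Do we have the data? (None = unknown)


def unanswered_questions(case: UseCase) -> list[str]:
    """Return the validation questions that still lack a clear answer."""
    gaps = []
    if not case.business_metric:
        gaps.append("business metric")
    if not case.ai_technique:
        gaps.append("AI technique")
    if case.data_available is not True:
        gaps.append("data")
    return gaps


def is_ready(case: UseCase) -> bool:
    """A use case moves forward only when all three questions are answered."""
    return not unanswered_questions(case)


# Hypothetical examples
churn = UseCase("churn alerts", "monthly churn rate", "risk and anomaly detection", True)
routing = UseCase("ticket routing", "average handling time", "automation")

print(is_ready(churn))                # True
print(unanswered_questions(routing))  # ['data']
```

Listing the open questions, rather than returning only a yes or no, gives the team a concrete to-do list before the use case comes back for review.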

Set Measurable Outcomes

Once you’ve identified the right use cases, the next step is to set clear, measurable outcomes. This prevents AI projects from drifting into vague experimentation or improving metrics that don’t matter to the business.

To do this, define each objective using a simple structure: From X → To Y → By When → Owned By Whom. This ensures every goal is specific, time-bound, and tied to an accountable leader, not just an abstract improvement target.

For example, strong AI outcomes look like this:

- Reduce downtime from 8% to 4% in 12 months

- Increase forecast accuracy from 70% to 90% within four quarters

- Cut customer response time from 24 hours to 2 hours across priority segments

These work because they’re precise, quantifiable, and directly linked to operational performance. Clear outcomes also guide everything that follows: model design, data requirements, pilot criteria, and scalability decisions.
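One way to keep the From X → To Y → By When → Owned By Whom structure honest is to record each outcome as data and track how much of the gap has been closed. The sketch below assumes a hypothetical `Outcome` class; the deadline and owner values are placeholders, not figures from any real program.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Outcome:
    metric: str
    baseline: float  # From X
    target: float    # To Y
    deadline: date   # By When
    owner: str       # Owned By Whom

    def progress(self, current: float) -> float:
        """Fraction of the baseline-to-target gap closed so far.

        Works for metrics that should fall (downtime) or rise (accuracy).
        """
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return (current - self.baseline) / gap


# Placeholder deadline and owner, for illustration only
downtime = Outcome("unplanned downtime (%)", 8.0, 4.0, date(2026, 12, 31), "VP Operations")
print(downtime.progress(6.0))  # 0.5, i.e. halfway from 8% to 4%
```

A negative value means the metric has moved away from the target, which is worth surfacing as early as possible.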

Step 2: Assess AI Readiness and Data Capabilities

Before AI can scale, the organization needs a stable foundation: clean data, modern systems, and teams capable of acting on insights. Most failures happen here, long before a model is built.

Evaluate Your Digital Ecosystem

This means assessing whether your data sources are unified, accessible, and consistently labeled. If your systems force teams to reconcile conflicting versions of the truth, or if your cloud and integration layer cannot support high-volume, real-time workloads, scaling AI beyond isolated pilots will not be feasible.

Strengthen Data Quality and Governance

AI only performs as well as the data it receives, which makes ownership, quality controls, lineage tracking, and secure access essential. Compliance with frameworks such as GDPR, HIPAA, or regional data mandates is not just regulatory housekeeping; it is the backbone of trustworthy AI.
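Quality controls become easier to enforce once they are expressed as numbers. As a rough sketch, two basic checks are completeness (how often a field is filled in) and duplicate rate (how often a key repeats). The function names and the sample `customers` records below are made up for illustration.

```python
def completeness(rows, field):
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def duplicate_rate(rows, key):
    """Share of rows whose key value already appeared in an earlier row."""
    if not rows:
        return 0.0
    seen, dupes = set(), 0
    for r in rows:
        value = r.get(key)
        if value in seen:
            dupes += 1
        seen.add(value)
    return dupes / len(rows)


# Made-up sample records
customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "b@example.com"},
    {"id": 3, "email": None},
]

print(completeness(customers, "email"))  # 0.5
print(duplicate_rate(customers, "id"))   # 0.25
```

Checks like these are most useful when each data owner agrees on a minimum threshold and the results are reviewed on a regular schedule.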

Build Scalable, Modern Architecture

Organizations need cloud-native environments, modular pipelines, and real-time data flows that support continuous learning and rapid model deployment. Without this, even successful pilots collapse when pushed to enterprise scale.

Assess Talent and Organizational Readiness

Finally, assess talent and organizational readiness. Technology does not scale by itself; people do. Ask:

- Do business leaders understand how to interpret AI outputs?

- Do teams know how to translate model insights into operational decisions?

- Where are the capability gaps in analytics, engineering, and domain expertise?

A readiness assessment clarifies where upskilling and structural changes are required.

Step 3: Choose the Right AI Tools and Platforms

Choosing an AI platform is a strategic decision, not a feature comparison exercise. The platform you select must align with your data maturity, integration landscape, and governance requirements; otherwise, AI will never scale beyond isolated pilots.

Start with Enterprise Requirements, Not Features

Start by defining your enterprise requirements. Before looking at tools, confirm whether the platform can integrate cleanly with your core systems, such as ERP, CRM, IoT platforms, and your data lake. Also, ensure it supports your security, compliance, and data-residency needs across all markets. If a platform cannot operationalize models across business units and instead only works in small pilots, it will stall your AI roadmap, no matter how advanced it looks on paper.

Evaluate Core AI Ecosystems

Once the requirements are clear, evaluate the major enterprise AI ecosystems. Platforms like Azure AI, Google Vertex AI, AWS SageMaker, and IBM Watsonx all offer end-to-end capabilities for model development, deployment, monitoring, and governance. The real question isn’t which platform is “best,” but which one fits your architecture with minimal friction and reduces long-term operational overhead.

Match the Platform to Your Maturity Level

Your platform choice must also match your team’s maturity level. Early-stage teams often benefit from low-code or AutoML tools that speed up experimentation without heavy engineering work. Mature teams with deeper skills may need full MLOps frameworks like MLflow, Kubeflow, or Weights & Biases to automate training, monitoring, and large-scale operations. Selecting a platform that is too advanced for your organization’s current capability is one of the fastest ways to stall pilots and delay value.

Prioritize Governance and Responsible AI

Equally important is governance. Any platform you choose must support explainability, bias detection, audit trails, access controls, and compliance monitoring. Without these guardrails, AI adoption will fail as soon as it reaches regulated or high-risk functions.


Step 4: Develop and Train AI Models

Once your data and platforms are ready, the next step is building AI models that solve real business problems. This is where AI moves from concept to impact, forecasting more accurately, detecting issues earlier, and automating decisions at scale.

Build a Cross-Functional Development Team

Model development is not just a technical exercise. It requires data scientists, ML engineers, domain experts, and IT working together.

Without business input, models become technically impressive but operationally useless.

A strong team ensures the model reflects real workflows, decision logic, and operational constraints.

Choose the Right Modeling Approach for the Problem

Different problems require different AI techniques. The goal is not to use the “fanciest” model, but the right fit for the data and the use case.

- Predictive analytics work well for forecasting or risk scoring.

- NLP models help with customer conversations, documents, and service bots.

- Computer vision supports inspections, quality checks, and safety monitoring.

Choose a method that performs reliably with the data you actually have, not the data you wish you had.

Train, Validate, and Stress-Test the Model

Before a model reaches production, it must be tested thoroughly. Training should use clean, representative data. Validation must mimic real-world conditions, not ideal scenarios.

Check for bias, drift, and blind spots, and make sure the output can be explained clearly to business stakeholders.

UPS did this well: their ORION routing model saved millions in fuel and miles not because it was “advanced,” but because it was rigorously tested and validated before deployment.
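Drift checks can start small. One widely used measure is the Population Stability Index (PSI), which compares how a feature was distributed in training data with how it looks in live data. The sketch below is a plain-Python illustration under simple assumptions (equal-width bins taken from the training sample); it is not drawn from any of the companies mentioned in this guide.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between two numeric samples.

    Bins are equal-width and derived from the `expected` (training) sample.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clip out-of-range values
        # A small floor avoids log(0) when a bin is empty
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training = list(range(100))        # illustrative training-time values
live = [v + 30 for v in training]  # the same values shifted upward

print(psi(training, training))  # 0.0, identical distributions
print(psi(training, live) > 0.25)  # True, a large shift
```

A commonly cited rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but the right thresholds depend on the feature and the use case.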

Use MLOps to Scale and Sustain Performance

MLOps ensures AI systems stay accurate, secure, and reliable long after deployment. It automates the monitoring, retraining, versioning, and compliance steps that keep models from drifting or silently failing. Without MLOps, even well-built models degrade over time; with it, AI becomes a stable, repeatable business capability rather than a one-off experiment.

A good example of this is Bosch’s predictive maintenance work for a global diesel engine manufacturer. Bosch applied predictive analytics to years of engine sensor data and built a model that could predict failures up to two weeks in advance with nearly 100% accuracy, preventing breakdowns, cutting warranty costs, and improving delivery reliability. The reason this solution sustained value wasn’t just the model itself, but the disciplined monitoring and lifecycle management wrapped around it.

Step 5: Pilot and Validate AI Projects

Pilots are the safest way to test AI in real operating conditions before you commit to full-scale deployment. The goal is not experimentation for its own sake; it's to prove that AI delivers measurable value, is usable by the business, and is reliable under real-world constraints.

Choose High-Impact, Data-Ready Pilots

A strong pilot begins with a problem that matters. Focus on use cases with clear financial or operational value, supported by clean, accessible data, and owned by a business leader who is accountable for results. Pilots fall apart when teams try to "fix the data later" or when no one is responsible for the outcome.

Run Cross-Functional Pilot Teams

AI only succeeds when technical and business teams work together. Data scientists ensure the model is accurate, domain experts shape real-world decision logic, IT manages integration and security, and end-users validate whether the solution fits actual workflows. Without this alignment, pilots become technically strong but operationally irrelevant.

Define Success Before You Start

Every pilot must have clear definitions of "success." Technical metrics, such as accuracy, precision, drift, and latency, show whether the model works.

Business metrics, such as cost savings, cycle-time reduction, fewer errors, and revenue lift, show whether it is worth scaling. If you can't measure the impact, you can't justify rollout.
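To make the two kinds of metrics concrete, here is a minimal sketch that computes accuracy and precision for a binary model alongside a simple business measure, percentage reduction. The labels and predictions are invented, and the function names are illustrative.

```python
def accuracy_and_precision(y_true, y_pred):
    """Accuracy and precision for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    correct = sum(1 for t, p in pairs if t == p)
    true_pos = sum(1 for t, p in pairs if t == 1 and p == 1)
    false_pos = sum(1 for t, p in pairs if t == 0 and p == 1)
    accuracy = correct / len(pairs)
    precision = true_pos / (true_pos + false_pos) if (true_pos + false_pos) else 0.0
    return accuracy, precision


def pct_reduction(before, after):
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100


# Invented pilot data
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

print(accuracy_and_precision(y_true, y_pred))  # (0.75, 0.75)
print(round(pct_reduction(24, 2), 1))          # 91.7, e.g. response time cut from 24 hours to 2
```

Reporting both numbers side by side keeps the conversation honest: a model can score well technically and still move the business metric very little.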

Use a Stage-Gate Approach

Not every pilot should move forward. Use structured checkpoints to evaluate performance, data stability, workflow fit, regulatory risks, and adoption. If a pilot fails one of these criteria, stop it early. Scaling weak pilots drains resources and kills momentum.
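A stage gate can be as simple as a checklist that must pass in full. The sketch below mirrors the five criteria listed above; the gate names and the `stage_gate` function are hypothetical, and a missing result is deliberately treated as a failure.

```python
# The five checkpoints named above, as illustrative keys
GATES = ("performance", "data_stability", "workflow_fit", "regulatory_risk", "adoption")


def stage_gate(results):
    """Return ("advance", []) only if every gate passed; otherwise ("stop", failed_gates).

    A gate with no recorded result counts as failed.
    """
    failed = [gate for gate in GATES if not results.get(gate, False)]
    decision = "advance" if not failed else "stop"
    return decision, failed


pilot = {
    "performance": True,
    "data_stability": True,
    "workflow_fit": True,
    "regulatory_risk": True,
    "adoption": False,
}

print(stage_gate(pilot))  # ('stop', ['adoption'])
```

Treating an unrecorded gate as a failure forces the team to actually run each review instead of assuming it would pass.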

Prepare for Scaling Before the Pilot Ends

When a pilot proves its value, the groundwork for scale must already be in place. That means solid data pipelines, clear governance, compliance safeguards, user training, and defined integration paths into systems like ERP or CRM. Scaling should feel like a continuation of the pilot, not a reset.

Step 6: Integrate AI into Business Workflows

Pilots only matter if they change how work actually gets done. This step is about moving AI out of labs and slide decks and wiring it into the day-to-day decisions that run the business.

Embed AI Where Decisions Are Made

AI should sit inside core processes, not in a separate dashboard that people might check “if they have time.”

That means connecting models directly to planning, supply chain, customer service, finance, risk, quality, and field operations so that recommendations or actions appear in the tools people already use.

Coca-Cola, for example, has been using AI to power both creative marketing campaigns and supply chain decisions. They’re piloting AI-driven suggestions for retailers that recommend which SKUs to order next and how to stock them, based on historical sales, weather, and geolocation data. That’s AI embedded in commercial and supply chain workflows, not just analytics reports.

The principle is simple: if employees need to “go to another system” to see AI insights, adoption will stay low. The output has to show up where the decision is made.

Integrate Models into Core Systems and Data Pipelines

To scale, AI must plug cleanly into your existing technology stack. Models need reliable inputs from ERPs, CRMs, IoT platforms, and data lakes, then push outputs back into those same systems as triggers, recommendations, or automated actions.

That means building APIs, event-driven integrations, and data pipelines that keep models fed with current data and keep business systems updated with model outputs. If integration is fragile or manual, the AI program will stall as soon as you try to scale beyond a few pilots.

Automate Monitoring, Retraining, and Governance

Once AI is in production, the risk shifts from “will this work?” to “will this stay reliable and safe over time?”

You need automatic monitoring for performance, drift, latency, and usage; clear processes for retraining and versioning; and audit trails for who changed what and when. Bias checks, access controls, and compliance reviews should be part of the standard lifecycle, not ad hoc fixes after something goes wrong.

Without this discipline, models quietly decay, trust erodes, and teams fall back to manual work, killing the value you fought to create.
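As a rough illustration of automated monitoring, the sketch below compares a model snapshot against agreed limits and flags when retraining should be considered. The `Snapshot` class, the `LIMITS` values, and the retraining rule are assumptions made for the example; real limits should come from your own pilot baselines.

```python
from dataclasses import dataclass

# Hypothetical limits; set these from your own pilot baselines
LIMITS = {"min_accuracy": 0.85, "max_drift": 0.25, "max_latency_ms": 500.0}


@dataclass
class Snapshot:
    accuracy: float
    drift: float       # e.g. a drift score for a key input feature
    latency_ms: float


def review(snapshot, limits=LIMITS):
    """Compare one monitoring snapshot against the limits and list any breaches."""
    alerts = []
    if snapshot.accuracy < limits["min_accuracy"]:
        alerts.append("accuracy")
    if snapshot.drift > limits["max_drift"]:
        alerts.append("drift")
    if snapshot.latency_ms > limits["max_latency_ms"]:
        alerts.append("latency")
    # Assumed rule: accuracy or drift breaches call for retraining;
    # latency is an engineering issue rather than a model-quality one
    retrain = "accuracy" in alerts or "drift" in alerts
    return {"alerts": alerts, "retrain": retrain}


print(review(Snapshot(0.91, 0.08, 120.0)))  # {'alerts': [], 'retrain': False}
print(review(Snapshot(0.80, 0.31, 120.0)))  # {'alerts': ['accuracy', 'drift'], 'retrain': True}
```

In practice a check like this would run on a schedule and write its result to an audit log, so there is a record of when each alert fired and what was done about it.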

Make Human–AI Collaboration the Default

Integrating AI isn’t just a tech exercise; it’s a workflow and role-design exercise. People need to know:

- Where AI is used in their process

- How to interpret its recommendations

- When to rely on it and when to override it

That often means updating SOPs, redefining responsibilities, and training teams on new decision flows. The smoother and clearer the workflow, the faster AI becomes a natural part of daily operations instead of “yet another tool” they’re asked to use.

Step 7: Monitor, Optimize, and Scale

Deploying AI isn’t the end of the journey. Models drift, data changes, and business conditions evolve. Long-term value depends on constant monitoring, disciplined optimization, and scaling only when the system proves it can handle real operational load.

Monitor Models and Business Impact in Real Time

AI performance must be tracked with the same rigor as financial and operational KPIs.

This includes monitoring accuracy, drift, latency, data quality, user adoption, and the actual business outcomes the model is supposed to influence, whether that’s cost, cycle time, customer experience, or risk reduction.

Continuously Improve Through Feedback and Iteration

AI systems must evolve with the business.

That means gathering feedback from end-users on workflow friction, overrides, and false positives; reviewing business unit input on KPI impact; and analyzing system logs for drift or reliability issues.

Netflix continuously iterates on its recommendation engine using large-scale A/B testing. According to Wired, the company runs hundreds of A/B tests each year, often involving over 100,000 users per test, to refine recommendations as viewer behavior shifts. This constant experimentation is what keeps its algorithms aligned with real-world user patterns.
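For teams that want to run their own A/B tests, a standard way to judge whether a difference between two variants is real is a two-proportion z-test. The sketch below is a generic statistical illustration with invented numbers; it does not describe how Netflix analyzes its experiments.

```python
import math


def two_proportion_z(conv_a, n_a, conv_b, n_b):
    """z statistic and two-sided p-value for the difference between two rates.

    conv_a / conv_b are success counts; n_a / n_b are group sizes.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented example: 100,000 users per arm, 5.0% vs 5.3% engagement
z, p_value = two_proportion_z(5000, 100_000, 5300, 100_000)
print(z > 0, p_value < 0.05)  # True True
```

With groups this large, even a 0.3-point lift is statistically detectable, which is one reason large-scale tests can pick up small improvements.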

Scale Only After Operational Proof

A pilot that works in one domain won’t necessarily perform the same way across regions, teams, or business units.

Before scaling, verify that data definitions are consistent, integration is feasible, workflows are aligned, and security and compliance requirements hold up. Most enterprise failures happen when teams try to scale too early.

Scaling should feel like a continuation of the pilot, not a reinvention.

Maintain Momentum Through Governance and Workforce Enablement

AI programs grow only when governance and people grow with them.

This includes updating governance as AI expands into sensitive processes, retraining teams on new workflows and decision patterns, and conducting regular model audits for fairness, explainability, and compliance.

Strong governance and a skilled workforce aren’t supporting elements; they’re the operating system of enterprise AI. Without them, adoption stalls and models lose trust.

Step 8: Build an AI Culture of Innovation

Technology may ignite an AI program, but culture determines whether it scales. Organizations that succeed with AI reshape how teams work, learn, and make decisions, not just their tools.

Make Experimentation Part of Daily Work

AI improves only through constant testing and iteration. That requires giving teams the space, data access, and authority to experiment inside real workflows, not isolated innovation labs.

Create fast-cycle pilots, maintain AI sandboxes connected to real data, and run monthly model-review sessions to decide what to refine, scale, or retire. Innovation must operate as a recurring rhythm, not a one-off initiative.

Build AI Literacy Across the Organization

AI adoption stalls when only data teams understand how the technology works. Every role needs functional AI fluency: frontline staff interpreting outputs, managers making AI-assisted decisions, analysts validating predictions, and leaders reviewing AI-driven KPIs.

PwC reports that roles requiring AI skills are growing 3.5× faster than others. Broad literacy is now a baseline requirement, not an optional investment.

Redesign Incentives and Decision Rights

Culture shifts when incentives do. Reward employees for using AI insights, identifying automation opportunities, reducing manual exceptions, and improving model adoption.

If performance systems reward old behaviors, AI-enabled behaviors will never scale, regardless of the technology’s quality.

Ensure Leaders Model Data-Driven Behavior

Teams follow what leaders demonstrate, not what they announce. Executives must routinely use AI dashboards, push for evidence-based decisions, and support teams when AI recommendations challenge intuition. Consistent leadership behavior accelerates cultural adoption more than any training program.

Tie Innovation Directly to Business Outcomes

Experimentation only matters when it strengthens performance. Link innovation efforts to measurable outcomes: revenue uplift, cost reduction, cycle-time improvements, error reduction, or new capabilities added.

AI culture collapses when innovation becomes theatre. It thrives when experimentation reliably improves the business.

Ready to Build an AI-Ready Workforce?

Technology can only take you as far as your people. Most AI initiatives fail not because the models are wrong, but because teams lack the skills to use, govern, and scale them.

Edstellar helps enterprises close these capability gaps with structured, role-based AI training programs aligned directly to your digital strategy.

Programs for Enterprise Teams:

- AI for Everyone Corporate Training: Build organization-wide literacy so employees understand AI outputs, make data-driven decisions, and work effectively alongside automated workflows.

- AI for Data Scientists Corporate Training: Deep technical upskilling in generative AI, model development, evaluation, deployment, and MLOps practices designed for teams building and scaling enterprise AI solutions.

Edstellar works with organizations to design learning pathways that map to your operating model, governance standards, and transformation roadmap so your workforce is ready for real-world AI execution, not just theory.

So, start your AI readiness journey today.

Conclusion

AI doesn’t transform a business; leaders do.

The organizations that win aren’t the ones deploying the most models, but the ones that redesign how decisions are made, how data flows, and how people work alongside intelligent systems.

Across industries, the pattern is consistent: companies that treat AI as an operating model change, not a technology upgrade, are the only ones scaling value. They invest in data foundations, cross-functional ownership, rigorous governance, and continuous workforce upskilling.

If you take one lesson from this guide, let it be this: AI maturity is not measured by pilots launched, but by business outcomes sustained.

Build disciplined data pipelines. Deploy AI where decisions actually happen. Monitor relentlessly. Upskill your people.

And treat transformation as ongoing, not a project with an end date.

In a landscape where technology shifts every quarter, the advantage goes to organizations that adapt faster than AI evolves.
