Enterprise AI is evolving from single-purpose chatbots to agentic AI: systems of autonomous agents that plan, learn, and execute tasks. According to a recent PagerDuty survey, over half of companies are already deploying “agentic AI” solutions, and by 2027 as many as 86% expect to be operational with AI agents. Organizations see high upside: 62% of leaders expect triple-digit ROI from agentic AI, often by automating 26–50% of workloads. However, deploying agents at scale requires more than a powerful LLM; it needs the right framework, architecture, and organizational readiness.

This blog traces the evolution from chatbots to agentic AI: autonomous systems that plan, learn, and execute complex tasks with high ROI potential. It outlines the key components of AI agents and compares frameworks such as LangChain, CrewAI, and AutoGen for building them. It also covers selecting the right framework, training teams, and applying agents to real use cases to drive efficiency.

What are AI Agents and Frameworks?

AI agents are autonomous programs that proactively pursue complex goals by planning, acting, and adapting without constant human input. Unlike reactive chat assistants, they break down tasks, use tools and APIs, and leverage memory to execute multi-step workflows. They integrate large language models, reasoning, and external data sources to automate processes and make decisions. Enterprises value them for automating workloads and delivering high ROI, per recent surveys.

In the context of AI agents, frameworks are software platforms or libraries that provide the infrastructure to build, manage, and deploy autonomous agents. They combine components like memory management, tool integration, reasoning/planning logic, and workflow orchestration to augment foundation models. These frameworks simplify agent development by offering pre-built tools for task coordination, API connectivity, and memory handling, enabling scalable, efficient automation of complex tasks.

According to PagerDuty’s recent survey, enterprises are increasingly adopting AI agents for their transformative potential:

- 52% of firms anticipate agents automating 26–50% of workloads.

- 62% expect over 100% ROI from agentic AI implementations.

- 51% of companies report having already deployed AI agents, moving beyond pilot projects.

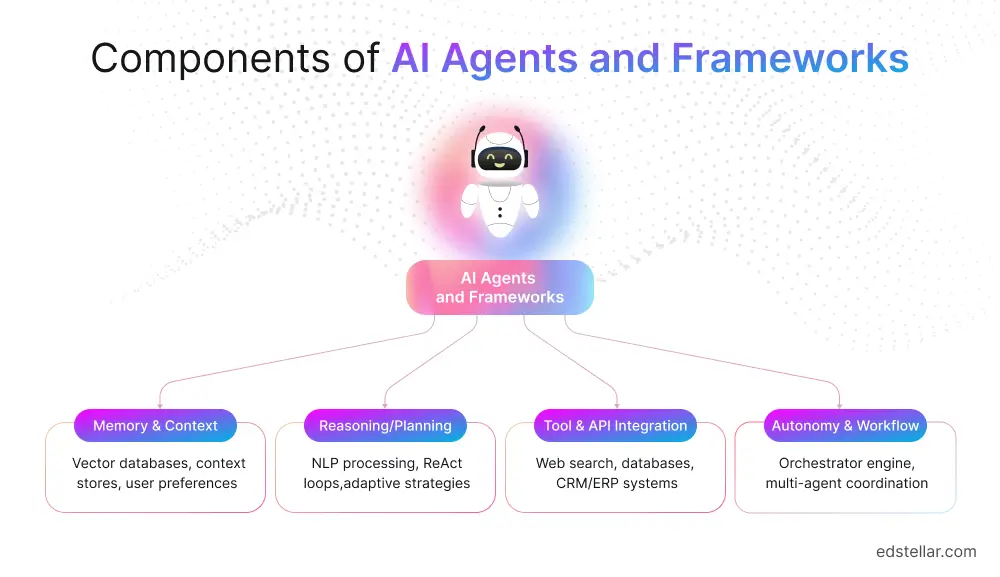

Building an AI agent involves integrating several key components that enable autonomous, goal-driven behavior. Memory, reasoning/planning, tool integration, and autonomous workflow management work together, with a framework tying them into one system, so that agents behave as proactive, adaptive systems rather than simple reactive programs.

The core components of an AI agent are:

- Memory and Context: Agents maintain memory (persistent or temporary) to store prior steps, user instructions, and outcomes. This ensures continuity in multi-step tasks, preventing the agent from starting from scratch each time. Memory can include vector databases or context stores to preserve history, user preferences, or task progress. For example, an agent handling a customer query might recall previous interactions to tailor its response.

- Reasoning/Planning: Agents break down high-level goals into manageable subgoals. Using natural language processing (NLP) and other inputs, they assess the current situation, decide on next steps, and adapt based on feedback or intermediate results. Patterns such as ReAct (interleaved reason-and-act loops) or search-based planning strategies guide this process, enabling agents to adjust their approach dynamically.

- Tool and API Integration: A hallmark of AI agents is their ability to interact with external tools, such as web search, databases, enterprise APIs (e.g., CRM, ERP), or other AI models. Agents use function calling to access these tools, filling knowledge gaps or performing specific tasks. Frameworks provide standardized protocols and utilities to seamlessly integrate APIs, query engines, or other services, ensuring robust tool connectivity.

- Autonomy & Workflow Management: Agents operate autonomously through an orchestrator or workflow engine that governs task progression. In multi-agent systems, a central “director” or coordinator agent sequences interactions among specialized agents. For instance, in a customer support workflow, the orchestrator might route an issue to a billing agent or a technical support agent based on the query. This ensures efficient task delegation and execution.
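To make these components concrete, here is a minimal, framework-free sketch of a single agent loop in plain Python. It is an illustration under stated assumptions, not how any particular framework implements agents: `scripted_model` is a hypothetical stand-in for an LLM that returns either a tool call or a final answer, and the `lookup_order` and `search_kb` tools and their data are invented. The loop shows memory (observations kept between steps), ReAct-style reason/act/observe steps, tool dispatch, and a step budget that escalates to a human when the agent cannot finish.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical tools; a real agent would call a CRM, a search API, etc.
def lookup_order(order_id: str) -> str:
    orders = {"A17": "shipped, delayed at carrier"}
    return orders.get(order_id, "not found")

def search_kb(query: str) -> str:
    articles = {"delayed": "Delayed orders get a tracking link and a 10% credit."}
    for keyword, answer in articles.items():
        if keyword in query:
            return answer
    return "no article found"

TOOLS: dict[str, Callable[[str], str]] = {"lookup_order": lookup_order, "search_kb": search_kb}

@dataclass
class Step:
    thought: str           # the model's reasoning, kept for traceability
    action: Optional[str]  # tool name, or None when the model gives a final answer
    arg: str               # tool argument, or the final answer text

def scripted_model(goal: str, memory: list[str]) -> Step:
    """Hypothetical stand-in for an LLM: picks the next step from what it has observed."""
    if not memory:
        return Step("I need the order status first.", "lookup_order", "A17")
    if len(memory) == 1:
        return Step("Find the policy for this status.", "search_kb", memory[0])
    return Step("I have enough to answer.", None, f"Your order is {memory[0]}. {memory[1]}")

@dataclass
class Agent:
    model: Callable[[str, list[str]], Step]
    tools: dict[str, Callable[[str], str]]
    max_steps: int = 5
    memory: list[str] = field(default_factory=list)  # observations persist across steps

    def run(self, goal: str) -> str:
        for _ in range(self.max_steps):
            step = self.model(goal, self.memory)             # reason
            if step.action is None:
                return step.arg                              # final answer
            observation = self.tools[step.action](step.arg)  # act
            self.memory.append(observation)                  # observe and remember
        return "ESCALATE: handing off to a human agent"      # step budget exhausted

agent = Agent(model=scripted_model, tools=TOOLS)
print(agent.run("Where is my order A17?"))
```

In a real system the scripted model would be replaced by an LLM call that sees the goal and memory in its prompt; the loop structure, tool registry, and escalation guard stay the same.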

Ultimately, AI agent frameworks provide the infrastructure to combine these components into a cohesive system. They augment foundation models (e.g., GPT-4, Llama, or IBM’s Granite) with specialized logic for memory management, tool integration, and control flow.

Popular frameworks include LangChain and LlamaIndex, which offer tools for building agents with memory stores, API connectors, and workflow orchestration. For example, the LangChain ecosystem includes LangGraph, a library for creating multi-agent workflows in which agents collaborate on complex tasks. Similarly, AutoGen enables multi-agent systems with customizable agent roles (e.g., planner, executor) and shared memory for coordination.

Frameworks often include pre-built protocols for function calling, vector database integration, and task orchestration, simplifying the development of scalable, production-ready agents.
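Function calling generally works by describing each tool to the model as a JSON schema and then executing the structured call the model emits. The sketch below builds a simplified schema from a Python function's signature using `inspect` and dispatches a JSON-encoded call. The schema layout only loosely resembles what hosted LLM APIs accept, and `get_invoice_total`, its data, and the call payload are hypothetical.

```python
import inspect
import json
from typing import Any, Callable

# Map simple Python annotations to JSON Schema type names (only scalars are handled here).
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def tool_schema(fn: Callable[..., Any]) -> dict[str, Any]:
    """Describe a function as a JSON-schema-style tool definition."""
    params = inspect.signature(fn).parameters
    properties = {name: {"type": JSON_TYPES[p.annotation]} for name, p in params.items()}
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }

def dispatch(call_json: str, registry: dict[str, Callable[..., Any]]) -> str:
    """Execute a model-emitted call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(call_json)
    fn = registry.get(call["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    result = fn(**call["arguments"])
    return json.dumps({"result": result})  # returned to the model as an observation

# Hypothetical enterprise tool (in practice this might wrap an ERP API).
def get_invoice_total(customer_id: str, year: int = 2024) -> float:
    """Return the invoiced total for a customer in a given year."""
    return 1250.0 if (customer_id, year) == ("C42", 2024) else 0.0

registry = {"get_invoice_total": get_invoice_total}
schema = tool_schema(get_invoice_total)
print(json.dumps(schema, indent=2))
print(dispatch('{"name": "get_invoice_total", "arguments": {"customer_id": "C42"}}', registry))
```

Returning an error object for unknown tools, rather than raising, lets the model see the mistake and retry, which is a common design choice in agent loops.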

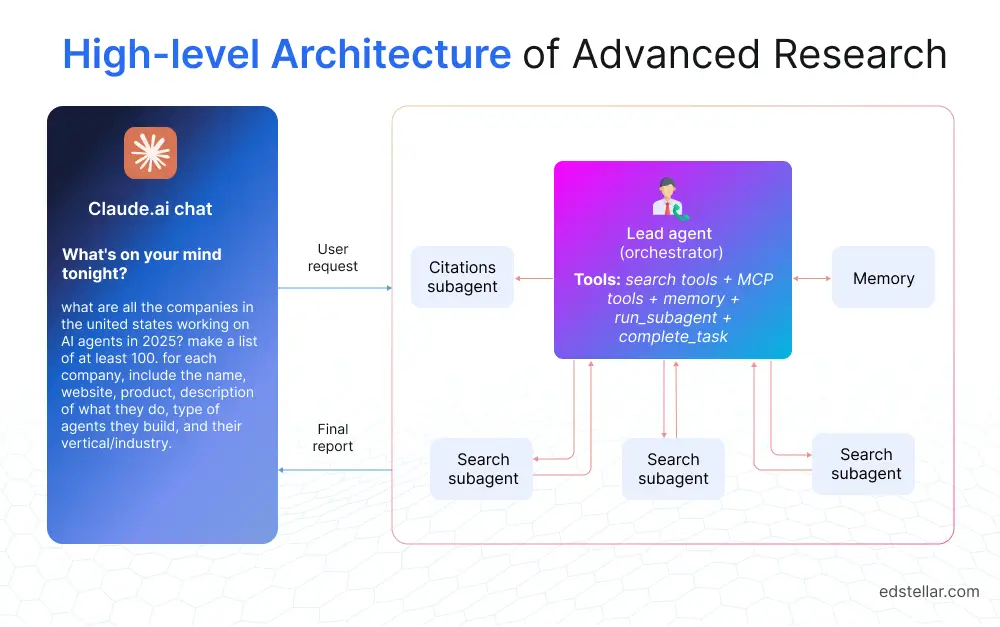

For instance, Anthropic’s multi-agent system uses a framework where a Lead Researcher agent delegates tasks to parallel Subagents (e.g., crawlers, search agents), coordinating via shared memory to synthesize results efficiently.

These components, supported by robust frameworks, enable AI agents to function as proactive team members. Modern agents leverage foundation models for language understanding, while frameworks add the necessary structure for planning, tool use, and workflow management. This combination allows agents to tackle complex, multi-step tasks with minimal human intervention.

Example (Architecture Diagram): The Anthropic Research multi-agent system visualizes this orchestration: a Lead Researcher agent parses a complex query, plans a research strategy, and spawns Subagents (e.g., crawlers, search agents) that gather information in parallel. Each subagent calls web search tools and collects facts.

The Lead agent uses a shared memory to track progress and, once results come in, synthesizes an answer. This orchestrator-worker pattern, with a “digital symphony” of coordinated agents, is common in advanced agent frameworks.
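The orchestrator-worker pattern maps naturally onto concurrent code. The sketch below uses plain `asyncio` and is not Anthropic's implementation: `plan`, `fake_search`, and the synthesis step are hypothetical stand-ins for LLM and web-search calls. The lead agent splits a query into subtasks, runs one subagent per subtask concurrently with `asyncio.gather`, records findings in a shared memory dict, and synthesizes once every subagent has reported.

```python
import asyncio

async def fake_search(topic: str) -> str:
    """Hypothetical web-search tool; sleeps to mimic network latency."""
    await asyncio.sleep(0.1)
    return f"3 sources on {topic}"

async def subagent(topic: str, shared_memory: dict[str, str]) -> None:
    # Each worker researches one subtopic and records its finding.
    shared_memory[topic] = await fake_search(topic)

def plan(query: str) -> list[str]:
    """Stand-in for the lead agent's LLM planning step."""
    return [f"{query}: market size", f"{query}: key vendors", f"{query}: regulation"]

async def lead_researcher(query: str) -> str:
    shared_memory: dict[str, str] = {}
    subtasks = plan(query)
    # Run all subagents concurrently instead of one after another.
    await asyncio.gather(*(subagent(t, shared_memory) for t in subtasks))
    # Synthesis step; a real system would ask an LLM to merge the findings.
    return "\n".join(f"- {t} -> {shared_memory[t]}" for t in subtasks)

print(asyncio.run(lead_researcher("AI agent frameworks")))
```

Because the three simulated searches overlap, the whole run takes roughly one search's latency rather than three; the trade-off, as the cost discussion later notes, is that every extra subagent consumes its own tokens.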

The figure below, from Anthropic, illustrates a lead agent dividing tasks among subagents to maximize parallelism and efficiency (this example is from a research assistant use case).

Leading AI Agent Frameworks (Real-World Platforms)

Several open-source AI agent frameworks have emerged to simplify building and deploying AI agents. These platforms provide reusable architectures, integration layers, and developer tools so teams don’t have to code every component from scratch.

Key examples include:

- LangChain (and LangSmith): LangChain is a widely used framework for chaining LLM calls into applications. It offers modular abstractions (chains, tools, agents, memory) that developers can configure. LangChain excels at simple agentic workflows (single or few-step tasks) and supports vector databases for memory, function-calling for tools, and event-based chain execution.

Its companion platform, LangSmith, adds debugging and monitoring. For example, a LangChain agent can sequentially call an LLM, then a search API, and update a vector DB. LangChain’s popularity means it has broad community support, though it can be complex for beginners.

- LangGraph: Built on top of LangChain, LangGraph lets developers define graph-based workflows of agents. Instead of a linear chain, LangGraph represents tasks as nodes and transitions as edges, with the state stored between steps. This approach suits cyclical or conditional workflows. For instance, in a travel booking agent, each action (find flights, select, pay) is a node; if a user rejects a flight, the graph can loop back to the “find flights” node. LangGraph excels when human-in-the-loop or branching decisions are needed.

- CrewAI: CrewAI adopts a role-based, multi-agent paradigm. It treats agent teams as a “crew” of workers. Each agent in CrewAI is given a role, goal, and task description in natural language. The framework manages collaboration: tasks can execute sequentially or hierarchically, with one manager agent overseeing others. CrewAI includes templates (e.g., stock market analysis crew with “analyst”, “researcher”, “strategy” agents).

It supports many LLMs (Claude, Gemini, Mistral, GPT, etc.) and has built-in RAG (retrieval-augmented generation) tools for accessing data. The philosophy is structured teamwork: define each agent’s part and let CrewAI handle the coordination. It offers a no-code interface for prototyping, making it friendlier for non-experts. However, its structured workflows may be less flexible for highly unexpected tasks.

- Microsoft AutoGen: AutoGen is Microsoft’s multi-agent framework aimed at real-time applications. It has three layers: the Core layer (a distributed programming framework with tracing/debugging for agent networks), AgentChat (a starter kit for conversational agents), and Extensions (plugins to interface with external libraries).

AutoGen emphasizes asynchronous messaging and offers tools like AutoGen Bench (for benchmarking agent performance) and a no-code studio for building agents. It is well-suited for composing teams of LLM agents (e.g., one agent writes a plan, another critiques it) but currently focuses on Microsoft’s ecosystem.

- Semantic Kernel (SK): Semantic Kernel is Microsoft’s open-source AI toolkit. Its Agent Framework (experimental) provides core classes for creating agents, and its Process Framework orchestrates multi-step workflows. SK is designed for enterprise developers and integrates smoothly with Azure services. It comes with built-in agent types (a chat assistant and an “AI assistant”) and allows organizing the steps of an agent’s tasks via a process object.

For example, a Semantic Kernel process might define steps like “analyze email” and “generate reply”, passing outputs between them. Deep Microsoft integration (e.g., with Dynamics or Outlook) is a strength, but the framework is evolving, and some parts remain experimental.

- LlamaIndex: Formerly “GPT Index”, LlamaIndex is an open-source data orchestration framework. It’s focused on connecting data sources (docs, DBs, APIs) to generative models. LlamaIndex recently added a “workflows” feature for multi-agent systems. In this model, each workflow step is an agent action (a node) triggered by events, with shared context allowing dynamic, asynchronous transitions.

This event-driven approach avoids rigid graphs; agents can iterate, loop, or branch based on results. LlamaIndex is ideal for tasks like question-answering over enterprise data: an agent retrieves relevant documents (via an index), another summarizes, etc.

- MetaGPT and ChatDev: These are multi-agent frameworks modeled as a simulated software company. In MetaGPT, a suite of agents assumes roles (e.g., “planner”, “coder”, “tester”) and communicates via documents and diagrams according to standard operating procedures. Similarly, ChatDev represents developers as dialogue-based agents passing messages to produce code and docs.

Both use OpenAI’s GPT models and aim to automate software engineering tasks by having specialized agents collaborate. MetaGPT’s research paper highlights using multi-collaborative design patterns to break down tasks among agents. These frameworks are especially interesting for R&D and automation of code pipelines, though they are more experimental than LangChain or CrewAI.

- AutoGPT: AutoGPT is an open-source agentic framework built on GPT-4. It autonomously decomposes a user’s goal into a sequence of subtasks. Agents in AutoGPT call each other in an automated workflow. For example, one agent might generate a marketing plan outline, then spawn agents to research each section. It was popular for prototyping independent AI agents, though it lacks the structural rigor of more polished platforms. It’s essentially a proof-of-concept that LLMs can self-invoke multi-step processes.
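The role-based idea shared by CrewAI and MetaGPT can be reduced to a few lines. This is a framework-free sketch, not CrewAI's API: the hypothetical `RoleAgent` class carries a role and a goal, `fake_llm` stands in for a model call, and a sequential process hands every earlier output to the next agent as context, echoing the analyst/researcher/strategy stock-analysis crew from the CrewAI description.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class RoleAgent:
    role: str
    goal: str

    def act(self, task: str, context: str, llm: Callable[[str], str]) -> str:
        # Role and goal are written into the prompt, as role-based frameworks do.
        prompt = f"You are the {self.role}. Goal: {self.goal}\nTask: {task}\nContext: {context}"
        return llm(prompt)

def fake_llm(prompt: str) -> str:
    """Hypothetical model: reports its role, its task, and how much prior work it saw."""
    role = prompt.split("You are the ")[1].split(".")[0]
    task = prompt.split("Task: ")[1].split("\n")[0]
    prior = prompt.split("Context: ")[1].count("[")  # one "[" per earlier output
    return f"[{role}] {task} (building on {prior} earlier output(s))"

def run_sequential(crew: list[tuple[RoleAgent, str]], llm: Callable[[str], str]) -> list[str]:
    """Sequential process: every agent receives all earlier outputs as context."""
    outputs: list[str] = []
    for agent, task in crew:
        outputs.append(agent.act(task, "\n".join(outputs), llm))
    return outputs

crew = [
    (RoleAgent("researcher", "gather recent news"), "collect headlines for ACME"),
    (RoleAgent("analyst", "assess sentiment"), "score the headlines"),
    (RoleAgent("strategist", "recommend a position"), "write the outlook"),
]
for line in run_sequential(crew, fake_llm):
    print(line)
```

A hierarchical process would replace the fixed list with a manager agent that chooses the next agent and task at each step, which adds flexibility at the cost of extra model calls.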

Ultimately, each framework has strengths. LangChain and SK are general-purpose and well-supported. CrewAI and AutoGen focus on multi-agent orchestration with role/task abstractions. LlamaIndex excels at plugging into data. Graph-based (LangGraph) vs event-driven (LlamaIndex) workflows suit different needs.

Microsoft tools (SK, AutoGen) integrate naturally with the Microsoft ecosystem. Enterprise teams should experiment: IBM recommends starting small (a single-agent pilot) to evaluate ease of use and fit. In the end, the best choice depends on use-case complexity, developer expertise, and existing infrastructure.
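The contrast between graph-based and event-driven workflows is easiest to see on the travel-booking example from the LangGraph description. Both halves of the sketch below are hand-rolled Python, not the LangGraph or LlamaIndex APIs; the node names, the simulated user who rejects the first flight, and the event classes are hypothetical. In the graph version, nodes and edges (including the conditional loop back to `find_flights`) are declared up front; in the event-driven version, each handler simply returns the next event and a dispatcher routes it by type.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Union

# ---- Graph-based: named nodes plus explicit (conditional) edges over shared state ----
def find_flights(state: dict) -> dict:
    state["offers_seen"] = state.get("offers_seen", 0) + 1
    state["offer"] = f"flight option {state['offers_seen']}"
    return state

def select(state: dict) -> dict:
    # Hypothetical user: rejects the first offer, accepts the second.
    state["accepted"] = state["offers_seen"] >= 2
    return state

def pay(state: dict) -> dict:
    state["booked"] = state["offer"]
    return state

NODES: dict[str, Callable[[dict], dict]] = {"find_flights": find_flights, "select": select, "pay": pay}
EDGES: dict[str, Callable[[dict], Optional[str]]] = {
    "find_flights": lambda s: "select",
    "select": lambda s: "pay" if s["accepted"] else "find_flights",  # loop back on rejection
    "pay": lambda s: None,                                             # terminal node
}

def run_graph(start: str, state: dict) -> dict:
    node: Optional[str] = start
    while node is not None:
        state = NODES[node](state)  # run the node, updating shared state
        node = EDGES[node](state)   # follow the edge chosen by the current state
    return state

# ---- Event-driven: handlers react to event types; no graph is declared ----
@dataclass
class SearchRequested:
    attempt: int

@dataclass
class OfferMade:
    offer: str
    attempt: int

@dataclass
class Booked:
    offer: str

Event = Union[SearchRequested, OfferMade, Booked]

def on_search(ev: SearchRequested) -> Event:
    return OfferMade(f"flight option {ev.attempt}", ev.attempt)

def on_offer(ev: OfferMade) -> Event:
    # Same hypothetical user: reject the first offer (emit a new search), book the second.
    return Booked(ev.offer) if ev.attempt >= 2 else SearchRequested(ev.attempt + 1)

HANDLERS: dict[type, Callable] = {SearchRequested: on_search, OfferMade: on_offer}

def run_events(event: Event) -> Event:
    while type(event) in HANDLERS:  # stop when no step listens for this event type
        event = HANDLERS[type(event)](event)
    return event

print(run_graph("find_flights", {})["booked"])
print(run_events(SearchRequested(attempt=1)))
```

Both versions book the second flight. The graph makes the allowed transitions explicit and easy to inspect; the event-driven form lets new steps be added by registering another handler, without editing a central edge table.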

Selecting and Evaluating Frameworks

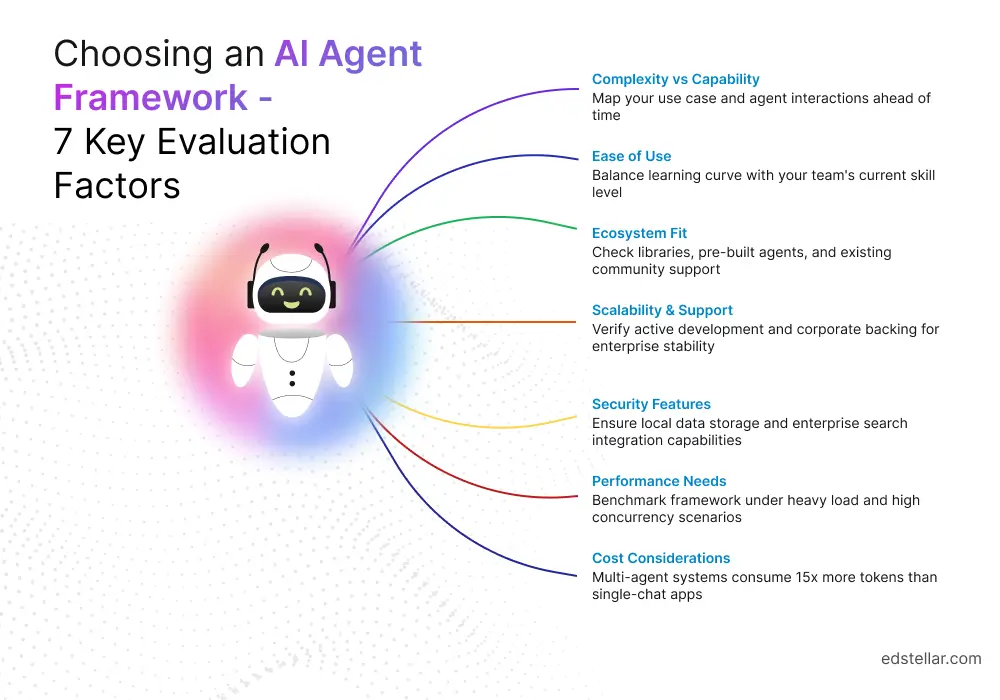

With many options available, how should a company pick an AI agent framework? Key factors include:

- Complexity vs Capability: What workflow do you need? Simple, single-agent tasks (e.g., an FAQ bot that can also make a database query) might only need LangChain or a basic agent chain. More complex pipelines (automated reporting, research, multi-department coordination) call for multi-agent orchestration (CrewAI, AutoGen, MetaGPT). Map your use case and agent interactions ahead of time. IBM recommends considering whether you need a single agent or a full team of agents.

- Ease of Use: Consider your team’s skill level. Some frameworks have low-code/no-code options. For instance, CrewAI and AutoGen offer graphical studios or templates for rapid prototyping. LangChain and LlamaIndex are Python libraries requiring code, which may suit ML engineers. Balance the learning curve: LangChain is powerful but can be complex for beginners, whereas smaller tools like Botpress (for simple chat) require less expertise but are limited in scope.

- Ecosystem Fit: Check the ecosystem of libraries, pre-built agents, and community support. LangChain has a vast community, many tutorials, and integrations (vector stores, maps, search). If your org is Microsoft-centric, Semantic Kernel or Azure’s native agents might plug into existing tools. If you use AWS or open-source LLMs, consider frameworks that are cloud-agnostic. The AI21 Labs guide notes, for example, that companies tied to Microsoft tools may benefit from Semantic Kernel’s integrations.

- Scalability & Support: Enterprise projects need stability. Verify if the framework is under active development and has corporate backing. LangChain, LlamaIndex, and Semantic Kernel have strong backing. Some niche frameworks or community projects (e.g., experimental MetaGPT bots) might lack long-term support.

- Security Features: Look at how the framework handles sensitive data. Does it allow local data storage? Can you plug in enterprise search indices safely? For example, frameworks offering built-in RAG should support private data sources. Ensure the agent can run behind your security perimeter if needed.

- Performance Needs: Some frameworks are designed for quick prototyping (AutoGPT) but struggle under heavy load. Others like AutoGen are explicitly built for distributed performance. If you anticipate high concurrency (many agents at once), benchmark the framework in a stress test.

- Cost: Open-source frameworks themselves are free, but consider the compute costs. Multi-agent systems typically consume more tokens and compute than single-chat apps (Anthropic reports that its multi-agent research system uses roughly 15x the tokens of a chat interaction). LLM API usage can become expensive, so check whether a framework lets you plug in on-premises or open-weight models such as Llama.
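The token multiplier matters because it scales every request. The sketch below does the arithmetic for a hypothetical workload: the request volume, tokens per request, and price per million tokens are placeholder assumptions to replace with your own figures, and only the roughly 15x multiplier comes from the Anthropic figure in the Cost factor.

```python
def monthly_llm_cost(requests_per_day: int, tokens_per_request: int,
                     usd_per_million_tokens: float, token_multiplier: float = 1.0,
                     days: int = 30) -> float:
    """Estimate monthly spend; token_multiplier models agent overhead vs. a single chat."""
    tokens = requests_per_day * days * tokens_per_request * token_multiplier
    return tokens / 1_000_000 * usd_per_million_tokens

# Placeholder assumptions, not benchmarks or real prices:
single_chat = monthly_llm_cost(2_000, 3_000, usd_per_million_tokens=5.0)
multi_agent = monthly_llm_cost(2_000, 3_000, usd_per_million_tokens=5.0, token_multiplier=15)
print(f"single chat: ${single_chat:,.0f}/month, multi-agent: ${multi_agent:,.0f}/month")
# single chat: $900/month, multi-agent: $13,500/month
```

With these placeholder numbers, 180 million tokens a month become 2.7 billion, so it is worth reserving multi-agent designs for tasks whose value justifies the extra spend.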

In essence, the right framework aligns with your enterprise’s needs. IBM’s guidance is to start small (single agent pilot) to compare frameworks’ ease of development and integration. This empirical approach, combined with the factors above, will help find the best fit.
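One way to make a pilot comparison repeatable is a weighted scorecard over the factors listed above. The sketch below is a generic decision-matrix calculation; the weights, the 1-to-5 scores, and the names `framework_a` and `framework_b` are placeholders to be filled in from your own pilot, not ratings of any real product.

```python
# Weights reflect one hypothetical team's priorities; they should sum to 1.
WEIGHTS = {
    "capability": 0.25, "ease_of_use": 0.20, "ecosystem_fit": 0.15,
    "scalability": 0.15, "security": 0.15, "cost": 0.10,
}

def weighted_score(scores: dict[str, int], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 pilot scores into a single weighted number."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(weights[k] * scores[k] for k in weights), 2)

pilot_scores = {  # placeholder scores from a hypothetical single-agent pilot
    "framework_a": {"capability": 4, "ease_of_use": 3, "ecosystem_fit": 5,
                    "scalability": 4, "security": 4, "cost": 3},
    "framework_b": {"capability": 5, "ease_of_use": 2, "ecosystem_fit": 3,
                    "scalability": 4, "security": 3, "cost": 4},
}
ranking = sorted(pilot_scores, key=lambda name: weighted_score(pilot_scores[name]), reverse=True)
for name in ranking:
    print(name, weighted_score(pilot_scores[name]))
```

Raising an error on missing criteria forces every candidate to be scored on the same factors, which keeps the comparison honest.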

Train Your Teams and Drive Adoption

Even the best technology fails without people to wield it effectively. Training and culture are pivotal for AI success. PagerDuty reports that 61% of companies prioritize company-wide AI agent workshops, and 52% plan formal mentorship programs to boost adoption.

McKinsey finds that ~48% of employees consider formal training on AI tools the most effective way to enhance usage, while insufficient training is a top reason pilot projects fail. To maximize AI’s potential, companies must invest in role-specific upskilling, leveraging structured programs like those from Edstellar to equip teams with tailored corporate AI skills.

- For Technical Teams (Developers, ML Engineers, Data Scientists): Hands-on workshops and sandbox environments are essential. Corporate training like Generative AI with PyTorch provides technical training on building and integrating AI models, ideal for engineers and developers crafting production systems.

AI for Developers covers algorithms, frameworks, and application integration, offering a broad foundation. Train teams on “prompt engineering” best practices and connecting large language model (LLM) outputs to enterprise APIs, as seen in pilot projects like an HR chatbot.

AI for Database Administrators equips DBAs with skills to optimize data management and automate queries, enhancing backend efficiency. Encourage experimentation through coding bootcamps paired with mentorship to build expertise, using small cross-functional “AI teams” to develop initial agents as knowledge bases.

- For Business/Decision-Makers (PMs, Managers, Execs): Leaders need strategic insights into AI’s capabilities and limitations. AI for Managers aligns AI initiatives with business goals, while AI for Project Managers teaches integration into planning, risk assessment, and resource allocation.

Also, AI for Business Analysts empowers analysts to leverage AI for predictive modeling and process optimization. Share case studies (e.g., automated customer service or HR virtual assistants) and ROI data (PagerDuty: 171% ROI on average). Address governance, risk, and ethics with courses like Responsible Generative AI, ensuring safe and compliant deployment. Bessemer Venture Partners emphasizes that executives must champion upskilling to drive adoption.

- For Functional Teams: Role-specific training ensures practical application. AI for Graphic Designers accelerates design processes while preserving creativity, and AI for Content Writers enhances brainstorming and editing with LLMs. AI for System Administrators focuses on automating and optimizing backend systems.

AI For Everyone demystifies AI concepts for all employees, fostering a baseline understanding across roles. For teams building interaction models, Building Intelligent Chatbots and Agentic AI trainings cover chatbots and autonomous systems for customer or internal tools.

- Building an AI Center of Excellence: Establish a community of practice to curate frameworks, share lessons (e.g., red and green flags), and maintain standards like naming conventions and security reviews. Regular lunch-and-learns or brown bag sessions sustain momentum. Training like Responsible Generative AI reinforces ethical standards across teams.

The key insight from industry research is that culture drives AI adoption. Employees are eager to use AI but need clear benefits and competence. Transparent communication, such as explaining “why are we using these agents?” and alignment with real pain points, makes training effective.

Use Cases and Business Impact

AI agents find a natural fit in tasks that are process-driven or data-intensive. Common enterprise applications include:

- Customer Service Automation: Agents can handle complex queries end-to-end. For example, an AI agent could retrieve a customer’s order history, diagnose an issue, query a knowledge base for solutions, and draft a response, all autonomously. Unlike chatbots, the agent remembers context and can follow up (e.g., tracking a shipment, initiating a return) without repeated user prompts. AI agents are proactive, working autonomously toward a specific objective with whatever tools they have access to. Orchestration enables handoff: if the agent hits a roadblock, it escalates to a human agent seamlessly.

- Knowledge Management: Tools like LlamaIndex and LangChain enable agents to search internal documents, manuals, or codebases to answer queries, with LlamaIndex workflows using event-driven steps, shared context, and asynchronous execution for dynamic, flexible multi-agent systems. For example, a help-desk agent can ingest company wikis, retrieve relevant answers, and track ongoing issues using its memory, efficiently linking related tickets.

- Data Analysis & Insights: Multi-agent systems can accelerate research. For example, finance teams have agents scanning news, reports, and social media; one agent extracts company names, others evaluate sentiment, and a strategist agent compiles a market outlook. As Anthropic showed, a multi-agent research system outperformed a single agent by 90% on complex information retrieval tasks. This means analysts can “instruct” the agent network to deliver a report, then refine it over iterations.

- Process Automation: Companies are building agents to automate internal workflows. An AI agent could manage procurement by analyzing usage data, identifying reorder points, interacting with suppliers (via APIs or email), and updating inventory systems. In HR, AI agents might screen resumes, schedule interviews, and draft offer letters, working through tasks across different software tools. The advantage of an agent here is its ability to integrate with multiple systems (CRM, ERP, payroll) and to learn from the workflow steps.
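The retrieval step behind the knowledge-management use case can be illustrated without a vector database. The sketch below ranks hypothetical wiki pages against a help-desk question using bag-of-words cosine similarity, a deliberately simple stand-in for the learned embeddings and vector stores that LlamaIndex or LangChain would normally plug in; the page names, their text, and the query are invented.

```python
import math
import re
from collections import Counter

def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

WIKI = {  # hypothetical internal pages
    "vpn-setup": "How to install the VPN client and reset your VPN password",
    "expense-policy": "Submit expense reports within 30 days with receipts attached",
    "laptop-refresh": "Laptops are replaced every three years; request a refresh via the portal",
}

def retrieve(question: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k pages most similar to the question, best first."""
    q = vectorize(question)
    scored = [(page, cosine(q, vectorize(body))) for page, body in WIKI.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]

# The top pages would be passed to the LLM as context for its answer.
print(retrieve("I forgot my VPN password"))
```

Swapping `vectorize` for an embedding model and the dict for a vector store changes the quality and scale of retrieval, but not the shape of the step: score, rank, and hand the top results to the model.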

These use cases cut across industries (telecom, banking, and healthcare are often cited). The common theme is that agents reduce manual toil in repetitive or complex tasks.

Conclusion

AI agent frameworks are rapidly maturing, offering a powerful paradigm for enterprise automation. Unlike standalone AI tools, agents orchestrate workflows with autonomy, persistence, and learning, driving efficiency gains when deployed strategically. Open-source platforms like LangChain, CrewAI, and AutoGen democratize agent development, providing accessible “digital worker” building blocks.

To harness this potential, organizations must adopt a holistic approach. Technically, they should choose frameworks that align with their infrastructure, ensuring secure integration and robust pipelines. Strategically, investing in people is key. Platforms like Edstellar offer over 2,000 tailored training programs in Technical, Management, Leadership, Compliance, and Behavioral skills, upskilling developers and educating decision-makers on AI’s impacts and risks. Training and planning ultimately distinguish successful AI deployments from failures.

Start small, iterate, and leverage tools like Edstellar’s Skill Management Software to build expertise. With the right frameworks and skilled teams, agentic AI can transform workflows, boost agility, and drive innovation through real-world pilots.
