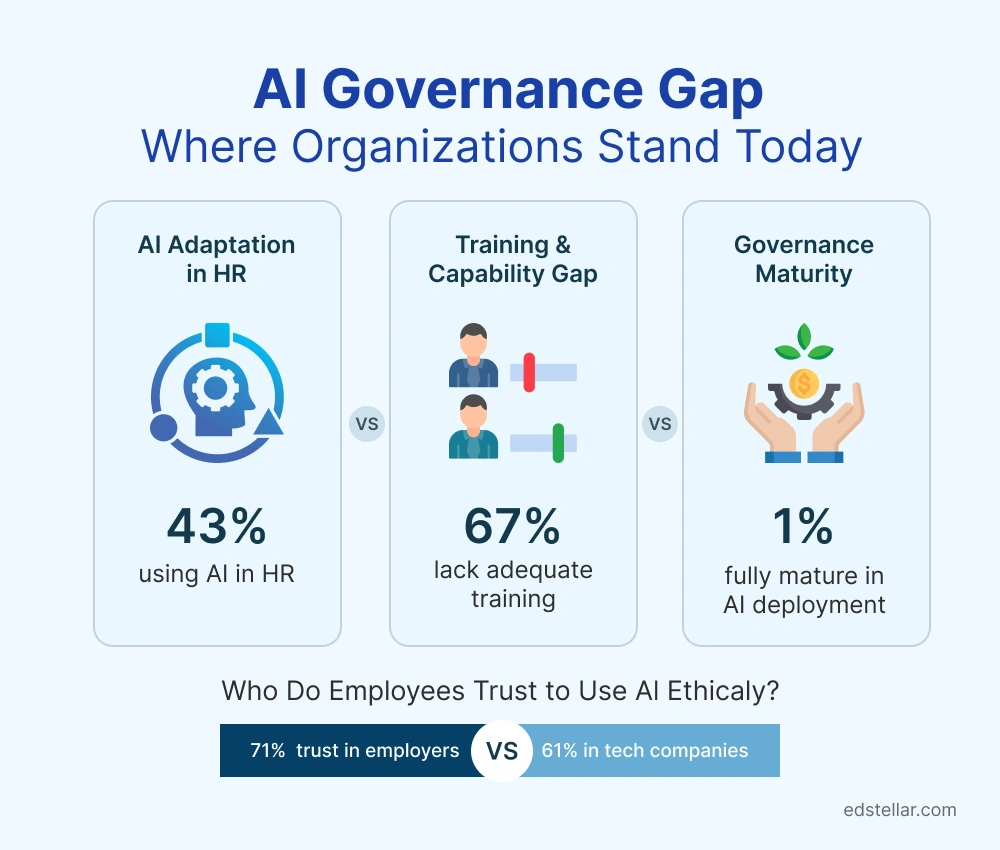

Artificial Intelligence has moved from a futuristic promise to an operational reality in workplaces worldwide. Yet while 92% of companies plan to increase their AI investments over the next three years, only 1% consider their AI deployment fully mature. This stark disconnect reveals a critical challenge: the AI governance gap.

For HR leaders, this gap represents both a risk and an opportunity. As AI reshapes everything from recruitment to performance management, the absence of robust, human-centric policies threatens to undermine employee trust, perpetuate bias, and expose organizations to compliance failures. With 71% of employees trusting their employers more than any other institution to deploy AI ethically, HR leaders hold a unique mandate to build governance frameworks that balance innovation with accountability.

Gebru’s point is that AI is being rolled out at scale while the rules for regulating algorithms and preventing biased datasets lag far behind. That gap leaves employees exposed to opaque, automated decision-making, making it essential for HR to step in with governance that actively protects people, not just systems.

This article explores the AI governance gap, examines why traditional approaches fall short, and provides actionable strategies for HR leaders to design policies that keep humans at the center of AI-driven transformation.

Understanding the AI Governance Gap in HR

The AI governance gap describes the widening chasm between AI adoption rates and the organizational structures, policies, and capabilities needed to deploy AI responsibly. This gap manifests in three critical dimensions:

The Adoption-Readiness Mismatch

While 43% of organizations now leverage AI in HR tasks, up from 26% in 2024, 67% of HR professionals report their organizations have not been proactive in training employees to work with AI technologies. This disconnect creates a dangerous scenario where powerful tools are deployed without adequate understanding of their limitations, ethical implications, or proper use cases.

As organizations race to implement AI-powered workforce management solutions, many overlook the critical foundation of governance and training that ensures responsible deployment.

The Trust Deficit

Employee concerns about AI are well-founded and widespread. More than half of U.S. workers cite cybersecurity (51%), inaccuracy (50%), and personal privacy (43%) as top concerns regarding generative AI in the workplace. Yet only 39% of C-suite leaders use benchmarks to evaluate their AI systems, and when they do, a mere 17% focus on ethical and compliance concerns.

Developing ethical leadership in the age of AI requires leaders who can navigate these complex trust dynamics and model responsible AI use.

The Speed-Safety Paradox

Organizations face mounting pressure to accelerate AI deployment: 47% of C-suite executives believe their organizations are implementing AI too slowly. However, rushing implementation without proper governance creates risks, including algorithmic bias, privacy violations, and decisions that undermine rather than enhance the employee experience.

Why Traditional Governance Frameworks Fall Short

Traditional IT governance frameworks, while valuable for system security and data management, prove insufficient for AI governance in HR contexts. Here's why:

Technology-First vs. Human-First Orientation

Conventional governance models prioritize technical compliance, security protocols, and system performance. However, AI in HR fundamentally concerns human decisions: who gets hired, who gets promoted, and who is flagged as a flight risk. These decisions require frameworks centered on fairness, transparency, and human dignity, not just data integrity.

Building a people-first transformation strategy ensures that technology serves employees rather than the reverse, addressing the human side of change that traditional frameworks miss.

Static Policies in Dynamic Environments

Traditional policies assume relatively stable technologies and use cases. AI systems, by contrast, continuously learn and evolve. An AI recruitment tool that performs well initially may develop biases over time as it processes new data. Static annual audits cannot catch these drift patterns, which require continuous monitoring and adaptive governance.
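A continuous drift check of this kind can be sketched in a few lines. The example below is a minimal illustration, not a prescribed method: the function name `detect_drift`, the 0.05 tolerance, and the monthly accuracy readings are all hypothetical, and a real deployment would choose its own metric and threshold.

```python
from statistics import mean


def detect_drift(baseline_scores, recent_scores, tolerance=0.05):
    """Compare the mean of a recent window against a baseline window.

    Returns (drifted, drop), where drop is how far the recent mean has
    fallen below the baseline mean. The tolerance is an assumed value.
    """
    drop = mean(baseline_scores) - mean(recent_scores)
    return drop > tolerance, drop


# Hypothetical monthly accuracy readings for a screening model.
baseline_accuracy = [0.91, 0.90, 0.92]  # months right after deployment
recent_accuracy = [0.86, 0.84, 0.83]    # most recent three months

drifted, drop = detect_drift(baseline_accuracy, recent_accuracy)
print(f"drift detected: {drifted} (accuracy drop of {drop:.3f})")
```

Running a check like this on every monitoring cycle, rather than once a year, is what lets a governance team see gradual degradation before it affects many candidates.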

Understanding how AI enhances change management processes helps leaders recognize why governance must be iterative and responsive rather than fixed and periodic.

Siloed Responsibility

In most organizations, IT manages technology, Legal handles compliance, and HR focuses on people processes. AI in HR disrupts these boundaries, creating accountability gaps. When an AI screening tool rejects qualified candidates from underrepresented groups, who is responsible: IT for the algorithm, HR for the hiring process, or Legal for compliance?

The Business Case for Human-Centric AI Policies

Building human-centric AI governance isn't just an ethical imperative; it's a business necessity that drives tangible outcomes:

Enhanced Decision Quality

When Unilever redesigned its hiring process with AI supported by audited algorithms, bias-aware training data, and human oversight, it reported a 16% increase in diversity among selected candidates. Human-centric AI policies like these improve not just fairness, but the overall quality, defensibility, and consistency of hiring decisions.

Risk Mitigation

The regulatory landscape around AI is rapidly evolving. The EU AI Act, U.S. state-level regulations, and industry-specific requirements create complex compliance obligations. Organizations without robust governance frameworks face potential fines, lawsuits, and reputational damage. Proactive governance transforms compliance from a cost centre to a competitive advantage.

Employee Trust and Adoption

Employees resist AI when they don’t trust how it will be used. When governance is transparent, with clear rules, explainable decisions, and visible human oversight in place, AI shifts from a “black box” to a trusted tool. That trust is what drives voluntary adoption and sustained, everyday use.

Developing skills intelligence in HR tech alongside governance ensures that AI-driven capabilities translate into measurable improvements in talent management.

Productivity Gains

Some studies report that AI-powered recruitment tools have reduced time-to-hire by up to 50% in organizations that implemented them at scale, and freed up 122 hours per employee annually for higher-value work. However, these benefits only materialize when employees trust and effectively use AI tools, and that in turn requires human-centric governance.

Key Components of Human-Centric AI Governance

Effective human-centric AI governance for HR comprises seven interconnected elements:

A. Ethical Principles and Values Framework

Start with clear principles that guide all AI decisions. These typically include:

- Fairness: AI systems must not discriminate based on protected characteristics

- Transparency: Employees should understand when and how AI influences decisions about them

- Accountability: Clear ownership for AI outcomes and decision-making authority

- Privacy: Robust data protection that exceeds regulatory minimums

- Human Agency: Humans retain ultimate decision-making authority on consequential matters

B. Cross-Functional Governance Structure

Human-centric AI governance requires representation from:

- HR leadership (owns people, processes, and outcomes)

- IT/Data Science (manages technical implementation)

- Legal/Compliance (ensures regulatory adherence)

- Ethics representatives (provide moral oversight)

- Employee representatives (bring workforce perspective)

Leading organizations establish AI Centers of Excellence (CoEs) where these functions collaborate. Research shows companies with AI CoEs and active HR involvement are 2.5 times more likely to successfully scale AI adoption.

C. Algorithmic Auditing and Monitoring

Regular audits should assess:

- Bias and fairness across demographic groups

- Accuracy and reliability of predictions and recommendations

- Model drift, where AI performance degrades over time

- Unintended consequences, such as gaming behavior or adverse impacts

Best practice calls for continuous monitoring with formal audits quarterly or whenever significant model changes occur.
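The bias and fairness item in the audit list can be made concrete with a small calculation. The sketch below is one possible approach under stated assumptions: it compares selection rates across groups and flags the result against the 1.2x disparity target that appears in the KPI section of this article. The function names, group labels, and candidate data are hypothetical.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs.

    Returns a dict mapping each group to its share of selected candidates.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Highest selection rate divided by the lowest; 1.0 means parity."""
    lowest = min(rates.values())
    if lowest == 0:
        return float("inf")  # one group was never selected
    return max(rates.values()) / lowest


# Hypothetical screening outcomes: 10 candidates per group.
records = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 4 + [("group_b", False)] * 6
)

rates = selection_rates(records)
ratio = disparity_ratio(rates)
DISPARITY_TARGET = 1.2  # target taken from the KPI section of this article
print(f"rates={rates}, ratio={ratio:.2f}, within target={ratio < DISPARITY_TARGET}")
```

A ratio above the target does not by itself prove discrimination; it is a signal that the tool and its training data deserve a closer human review.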

D. Transparency and Explainability Requirements

Employees deserve to understand AI-driven decisions that affect them. This means:

- Clear communication about where AI is used in HR processes

- Explanations of how AI-generated recommendations were reached

- Access to human review for contested decisions

- Regular transparency reports on AI use and outcomes

E. Data Governance and Privacy Protections

AI systems are only as good as their training data. Human-centric governance requires:

- Strict data minimization (collect only what's necessary)

- Consent mechanisms for data use

- Regular data quality assessments

- Secure data handling and storage

- Clear data retention and deletion policies

F. Training and Change Management

Technology alone doesn't drive transformation; people do. Governance must include:

- AI literacy programs for all employees

- Specialized training for HR professionals who deploy AI

- Change management to address fears and build trust

- Continuous learning as AI capabilities evolve

Currently, only 35% of HR professionals feel equipped to use AI technologies.

Training HR teams on essential topics, including AI governance and ethics, is critical for closing this gap.

G. Incident Response and Continuous Improvement

Even well-designed systems will encounter issues. Governance frameworks need:

- Clear escalation paths when AI produces problematic outputs

- Processes to pause or roll back AI deployments when necessary

- Post-incident reviews to understand root causes

- Feedback loops that incorporate lessons learned into policy updates

Building Your AI Governance Framework: A Step-by-Step Approach

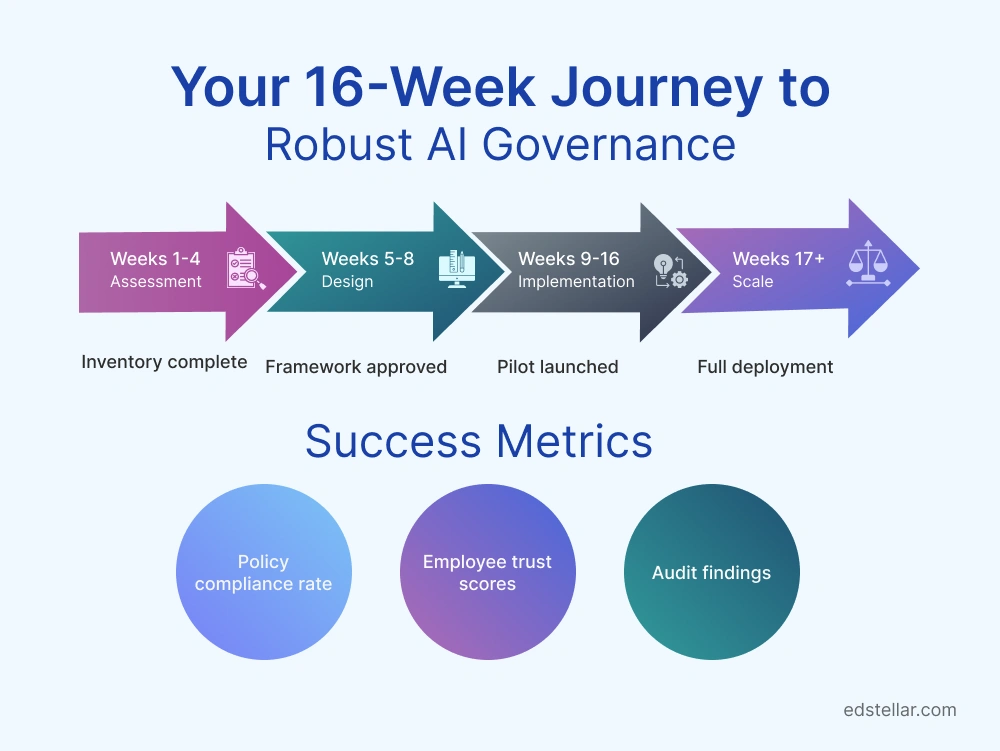

Implementing human-centric AI governance requires systematic planning and execution. Follow this roadmap:

Phase 1: Assessment and Preparation (Weeks 1-4)

Step 1: Inventory Current AI Use

- Catalog all AI tools currently used in HR processes

- Identify planned AI implementations in the next 12 months

- Document vendor relationships and data flows

Step 2: Assess Current Capabilities

- Evaluate existing governance structures and gaps

- Assess employee AI literacy levels

- Review current policies for AI coverage

Step 3: Define Governance Scope

- Determine which AI applications require strict governance vs. light oversight

- Identify high-risk use cases (e.g., hiring, termination decisions)

- Prioritize governance implementation based on risk and impact
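The scoping decision in Step 3 can be captured as a simple rule applied to the Step 1 inventory. The following is only an illustrative sketch: the `AIUseCase` type, the tier labels, and the sample inventory are hypothetical, and the high-risk set contains just the two examples named above (hiring and termination). Each organization would define its own list.

```python
from dataclasses import dataclass

# Assumption: only the two high-risk examples named in the article;
# a real policy would maintain a longer, reviewed list.
HIGH_RISK_DECISIONS = {"hiring", "termination"}


@dataclass
class AIUseCase:
    name: str           # tool or process name from the Step 1 inventory
    decision_type: str  # kind of decision the tool influences


def governance_tier(use_case: AIUseCase) -> str:
    """Return 'strict' for high-risk decision types, otherwise 'light'."""
    if use_case.decision_type in HIGH_RISK_DECISIONS:
        return "strict"
    return "light"


def prioritize(inventory: list[AIUseCase]) -> list[AIUseCase]:
    """Order the inventory so strict-governance use cases come first."""
    return sorted(inventory, key=lambda uc: governance_tier(uc) != "strict")


# Hypothetical inventory entries.
inventory = [
    AIUseCase("Shift scheduling assistant", "scheduling"),
    AIUseCase("Resume screening model", "hiring"),
    AIUseCase("FAQ chatbot", "employee_support"),
]

for uc in prioritize(inventory):
    print(f"{uc.name}: {governance_tier(uc)}")
```

Writing the rule down this explicitly, even in a spreadsheet rather than code, makes it easier for the governance board to review and challenge which tools land in which tier.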

Phase 2: Framework Design (Weeks 5-8)

Step 4: Establish Governance Structure

- Form AI Governance Board with cross-functional representation

- Define roles, responsibilities, and decision-making authority

- Create escalation paths for issues and concerns

Organizations can leverage proven change management frameworks to structure their governance implementation and ensure stakeholder buy-in.

Step 5: Develop Core Policies

- Draft ethical principles document

- Create AI use policies covering acceptable and prohibited applications

- Develop transparency and communication standards

- Establish audit and monitoring procedures

Step 6: Design Training Programs

- Create an AI literacy curriculum for all employees

- Develop specialized training for HR professionals

- Plan change management and communication campaigns

Understanding common digital transformation challenges and solutions helps anticipate obstacles during governance rollout.

Phase 3: Implementation (Weeks 9-16)

Step 7: Pilot with High-Value Use Case

- Select one high-impact, moderate-complexity use case

- Implement full governance framework for pilot

- Document lessons learned and iterate

Step 8: Deploy Training and Communication

- Launch AI literacy programs

- Communicate governance framework and policies

- Establish feedback mechanisms

Step 9: Implement Monitoring Systems

- Deploy technical monitoring for bias, accuracy, and drift

- Establish dashboards for governance metrics

- Create regular reporting cadence

Phase 4: Scale and Optimize (Week 17+)

Step 10: Expand to Additional Use Cases

- Apply lessons from pilot to other AI implementations

- Scale governance coverage across all HR AI tools

Step 11: Continuous Improvement

- Quarterly governance framework reviews

- Regular policy updates based on regulatory changes

- Ongoing training refreshers and updates

Building a change-ready culture ensures that governance doesn't become a one-time project but an embedded organizational capability.

Overcoming Common Implementation Challenges

HR leaders implementing AI governance typically encounter these obstacles:

Challenge 1: Resistance from Leadership

Symptom: Executives view governance as bureaucracy that slows innovation and increases costs.

Solution:

- Build business case with ROI projections (risk mitigation, improved decisions, employee trust)

- Start with lightweight governance for low-risk applications

- Demonstrate quick wins from pilot implementations

- Frame governance as a competitive advantage, not a compliance burden

Challenge 2: Technical Complexity

Symptom: HR professionals feel overwhelmed by AI's technical aspects and lack confidence to lead governance efforts.

Solution:

- Partner with IT/Data Science rather than trying to own technical details

- Focus HR expertise on use cases, outcomes, and human impact

- Invest in comprehensive AI training for corporate professionals

- Use plain-language explanations in policies

Challenge 3: Resource Constraints

Symptom: Small HR teams lack bandwidth for governance in addition to existing responsibilities.

Solution:

- Phase implementation over 6-12 months

- Leverage vendor resources for technical audits

- Use existing governance structures (e.g., expand data governance board scope)

- Share resources across functions through CoE model

Challenge 4: Vendor Lock-In and Opacity

Symptom: AI vendors resist transparency about algorithms, making auditing difficult.

Solution:

- Include governance rights in vendor contracts (audit access, explainability, bias testing)

- Require regular vendor-provided bias and accuracy reports

- Use third-party auditing services for independent validation

- Consider governance requirements in vendor selection

Challenge 5: Employee Fear and Distrust

Symptom: Employees resist AI tools due to job security concerns or lack of trust in algorithmic decisions.

Solution:

- Communicate transparently about AI use, purposes, and limitations

- Demonstrate human oversight through governance structure

- Provide mechanisms for employees to contest AI-driven decisions

- Share audit results and corrective actions taken

- Deploy effective change management tools to address resistance proactively

Measuring Success: KPIs for AI Governance

Effective governance requires clear metrics to track progress and identify issues. Key performance indicators should span four categories:

Technical Performance Metrics

- Algorithmic Fairness: Disparity ratios across demographic groups (target: < 1.2x)

- Accuracy Rates: Precision and recall for predictions (target: > 85%)

- Model Drift: Performance degradation over time (monitor monthly)

- Explainability Score: Percentage of decisions with clear explanations (target: 100% for high-impact)
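The accuracy target above can be checked directly from a model's confusion counts. The sketch below assumes a binary prediction task (for example, "candidate advanced" vs. "not advanced"). The function names and the counts are hypothetical; only the 85% target comes from the list above.

```python
def precision_recall(true_pos: int, false_pos: int, false_neg: int) -> tuple[float, float]:
    """Compute precision and recall from confusion-matrix counts.

    Precision: of everything the model flagged positive, how much was right.
    Recall: of everything actually positive, how much the model found.
    """
    predicted_pos = true_pos + false_pos
    actual_pos = true_pos + false_neg
    precision = true_pos / predicted_pos if predicted_pos else 0.0
    recall = true_pos / actual_pos if actual_pos else 0.0
    return precision, recall


def meets_target(value: float, target: float = 0.85) -> bool:
    """True when the metric exceeds the target (85% in the KPI list)."""
    return value > target


# Hypothetical counts from one audit period.
precision, recall = precision_recall(true_pos=90, false_pos=10, false_neg=20)
print(f"precision={precision:.3f} ok={meets_target(precision)}")
print(f"recall={recall:.3f} ok={meets_target(recall)}")
```

Reporting both numbers matters: in this made-up example the model clears the target on precision but not on recall, which means it is missing a meaningful share of qualified cases even though most of its positive calls are correct.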

Compliance and Risk Metrics

- Policy Adherence: Percentage of AI implementations following governance protocols (target: 100%)

- Audit Findings: Number and severity of issues identified (trend toward zero critical findings)

- Time to Resolution: Days from issue identification to remediation (target: < 30 days)

- Regulatory Violations: Number of compliance breaches (target: zero)

Adoption and Trust Metrics

- Employee Trust Score: Survey results on AI trust and fairness (target: > 70%)

- AI Literacy Levels: Percentage of employees completing training (target: 100%)

- Tool Utilization Rates: Actual vs. expected AI tool usage (target: > 80% of expected)

- Feedback Volume: Employee submissions about AI concerns (monitor for trends)

Business Impact Metrics

- Efficiency Gains: Time savings from AI adoption (target: varies by use case)

- Quality Improvements: Better hiring decisions, reduced turnover, etc.

- Cost Savings: Reduction in process costs vs. manual approaches

- ROI: Net value delivered relative to AI investment and governance costs

Future-Proofing Your AI Policies

AI technology and regulatory landscapes evolve rapidly. Forward-thinking governance anticipates these changes:

Emerging Trends to Watch

Agentic AI and Autonomous Systems: By 2026, AI agents will autonomously complete complex, multi-step tasks with minimal human intervention. Governance frameworks must address:

- When autonomous action is appropriate vs. requires human approval

- Accountability when AI agents make unexpected decisions

- Monitoring of agent-to-agent interactions

Multimodal AI Systems: As AI processes text, audio, video, and other data simultaneously, governance must cover:

- Consent for new data modalities (e.g., video interview analysis)

- Privacy protections across modalities

- Bias auditing in multimodal contexts

Regulatory Evolution: Anticipate stricter regulations similar to the EU AI Act coming to other jurisdictions. Build governance that exceeds current compliance requirements to minimize future adjustment needs.

Staying current requires cultivating an AI-ready culture that embraces continuous learning and adaptation as core organizational capabilities.

Building Adaptive Governance

Future-proof frameworks share three characteristics:

1. Modular Design: Structure policies as modular components that can be updated independently as technology or regulations change, rather than monolithic documents requiring complete rewrites.

2. Continuous Learning Mechanisms: Embed regular reviews, environmental scanning, and policy updates into governance processes. Quarterly review cycles work well for most organizations.

3. Participatory Governance: Include mechanisms for employees, customers, and other stakeholders to provide input on AI governance. Their concerns often predict regulatory and reputational risks.

Conclusion & Next Steps

The AI governance gap represents one of the most significant challenges and opportunities facing HR leaders today. As AI transforms talent acquisition, development, and management, the organizations that thrive will be those that successfully balance innovation with human-centric values.

Key Takeaways

- The Governance Gap Is Real: While AI adoption accelerates, 67% of HR professionals report that their organizations have not been proactive in training employees to work with AI.

- Human-Centric Policies Deliver Value: Organizations with robust governance see better decisions, higher trust, lower risk, and superior ROI.

- Cross-Functional Collaboration Is Essential: Effective AI governance requires HR, IT, Legal, and Ethics working together.

- Implementation Is Iterative: Start with high-value use cases, learn, and scale gradually.

- Continuous Adaptation Is Required: AI and regulations evolve rapidly; governance must too.

Your Next Steps

- Assess Your Current State: Conduct an AI governance maturity assessment to identify gaps.

- Build Your Coalition: Engage stakeholders from HR, IT, Legal, and leadership to champion governance efforts.

- Invest in Capabilities: Develop AI literacy across your workforce through structured training programs.

- Start Small and Scale: Pilot governance frameworks with one use case before expanding.

- Communicate Transparently: Keep employees informed about AI use, governance measures, and their rights.

How Edstellar Can Help

As you build your AI governance framework, equipping your HR team with specialized knowledge in AI ethics, bias auditing, and governance best practices is essential. Edstellar's expert-led training programs help HR leaders navigate the complexities of AI deployment while maintaining human-centric values.

Explore Edstellar's Training Solutions:

- Artificial Intelligence Training Programs

- Leadership Development for AI-Ready Organizations

- Change Management Programs

- Ethics and Compliance Training

Contact our training experts to design a customized AI governance training program for your organization.

Related Edstellar Articles

- Ethical Leadership in the Age of AI

- The Executive Guide to AI-Powered Workforce Management

- Building AI-Ready Culture: 5 Essential Shifts for 2026

- Role of AI in Enhancing Change Management Process

- 7 Ways Skills Intelligence is Changing HR Tech in 2026

- 19 Best Training Topics for HR Professionals in 2026

- What Is People-First Transformation? The Ultimate Roadmap

- Top 10 Change Management Frameworks for Organizations
