Work now moves at a speed that traditional learning systems cannot match. Roles shift quickly, workflows change with little warning, and teams often face problems that no course or curriculum yet covers. Deloitte’s 2024 Human Capital Trends report is clear about the core issue: work is changing faster than L&D can publish content.

This is why peer learning is becoming essential. Organizations need learning models that help people interpret new situations in real time, not six months after the curriculum is updated.

From Old Communities of Practice to Modern Peer Learning Networks

Peer learning is not a new concept. It is the natural evolution of what earlier Communities of Practice offered. Those groups focused on shared interests, informal discussion, and cultural alignment. They worked well in stable environments, where work changed slowly and teams had time to gather, reflect, and socialize knowledge.

The context today is very different. Work is faster, more fragmented, and more interdependent. Teams face novel challenges every quarter. In this environment, practitioners need learning structures that are more active, more embedded in daily work, and more accountable to business outcomes.

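In simple terms, the shift is from informal, interest-driven communities toward structured networks that are embedded in daily work and accountable for business outcomes.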

This evolution is not cosmetic. It is a response to a real capability gap.

Research links most tacit knowledge transfer to informal learning. One study, for example, estimates that 70 to 90 percent of workplace learning is informal and that tacit knowledge is captured largely through those channels. If organizations do not create a system to harness that flow, they leave capability development to chance.

Why Traditional Training Cannot Keep Up

Static courses cannot keep pace with workflows that change weekly. Employees do not need more content. They need spaces where they can compare what they are noticing, examine emerging challenges together, and apply new insights immediately. These interpretation spaces are what traditional L&D systems do not provide.

Most of the knowledge that matters today is tacit. It lives in judgment, pattern recognition, decision shortcuts, and lived experience. AI only intensifies this need: it introduces new problems faster than formal content can address them, and teams must make sense of those problems together. Peer learning is the only model that can respond at the same speed as the work.

Why Peer Learning Has Become the New Capability Engine

This is where modern peer learning moves from being helpful to being strategic. Inspired by the rise of Capability Academies, organizations are converting peer learning into structured operating systems that produce playbooks, shared methods, standards, and measurable performance gains. These networks are not interest groups. They are practitioner-led, business-backed systems designed to keep teams aligned and adaptive.

Peer learning is no longer an add-on. It has become the connective tissue that moves tacit knowledge across teams, accelerates problem-solving, and reduces the risk of capability gaps during periods of rapid change. If organizations do not build these networks intentionally, they create a structural disadvantage that becomes visible during every transformation effort, every project pivot, and every instance of knowledge loss.

How to Build a Peer Learning System That Actually Improves Performance

Most peer learning efforts fail long before the first session. The intent is strong, the idea is sound, but the system is weak. The team launches without a sponsor, roles are unclear, sessions drift into presentations, and no one tracks whether anything changes in real work. The result is predictable: after the initial enthusiasm fades, participation collapses and leaders conclude that “peer learning doesn’t work.”

It is not the concept that fails. It is the design.

A high-performing peer learning network needs a structure that supports momentum, protects focus, and converts conversation into capability. The system must work inside real organizational constraints such as bandwidth limits, cross-functional politics, and fluctuating sponsor attention.

Here is how to build one that improves performance instead of becoming another meeting series.

1. Build a Clear, Business-Aligned Peer Learning Charter

A peer learning network lives or dies on its charter. This document does not describe the community’s aspirations. It defines the business results the group is accountable for. Without this anchor, everything downstream becomes performative, and the network loses relevance as soon as priorities shift.

A strong charter links the network to specific enterprise priorities such as reducing defect rates, improving time-to-proficiency, strengthening customer experience, or reducing operational cycle times. A weak charter talks about community building or knowledge sharing without any measurable purpose.

A bad charter in action:

A global operations team launched a peer circle to “share best practices.” Because the purpose was vague, every session turned into a random update meeting. Within six weeks, senior members stopped attending, and the sponsor withdrew support.

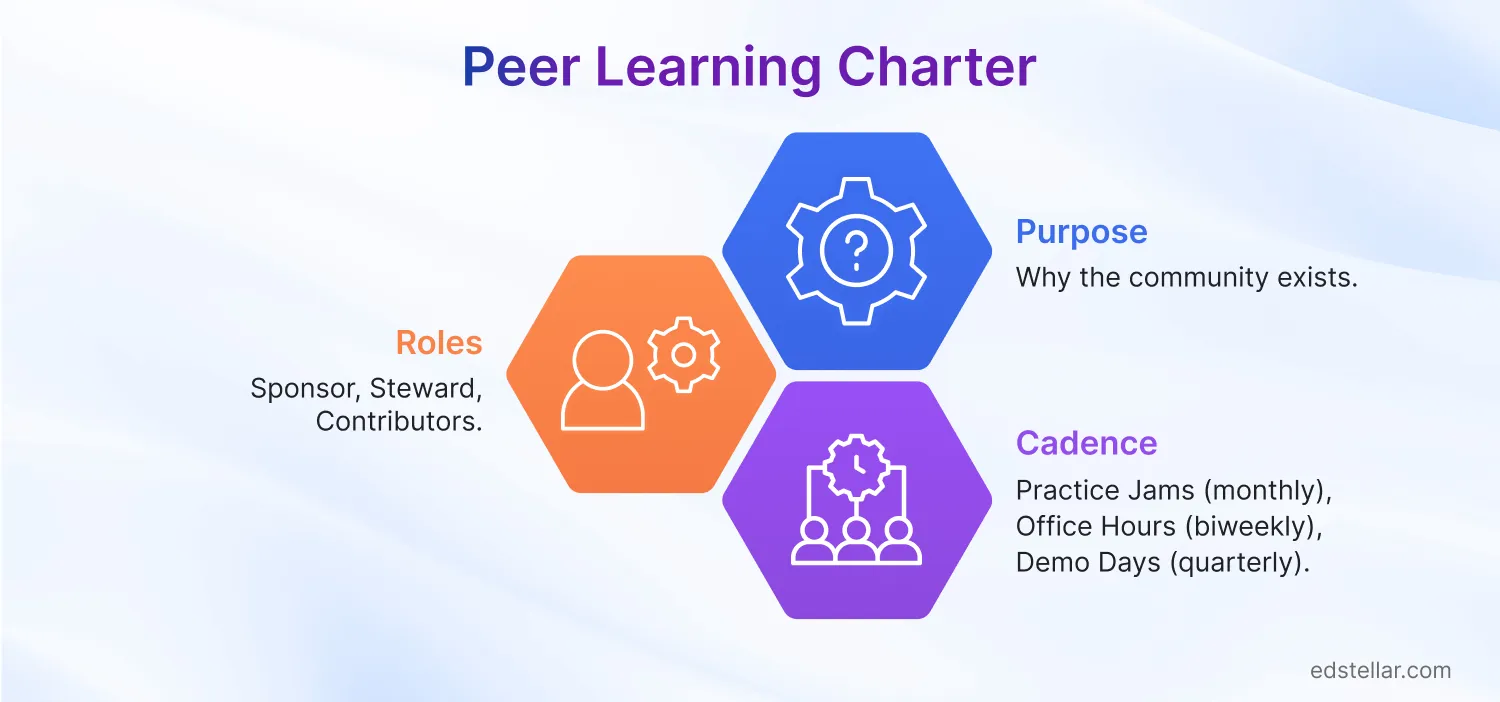

A strong charter answers three questions with precision:

- Purpose: The exact problem this network exists to improve

- Roles: Who sponsors, stewards, and contributes

- Cadence: When the network meets and what each session produces

Charter Red Flags:

- Purpose describes community, not outcomes

- No connection to business metrics

- Roles assigned based on availability instead of capability

- Cadence left “flexible”

2. Define Roles and Lightweight Governance That Enable Scale

Peer learning systems break when ownership is unclear or when the wrong people are placed in critical roles. Many organizations appoint the most available facilitator instead of the most capable one. This choice alone can stall momentum for months.

Most failures stem from predictable patterns: unclear role expectations, weak facilitator preparation, inconsistent follow-up, and no mechanism to maintain focus. Governance does not need to be heavy. It needs to be explicit enough to prevent drift.

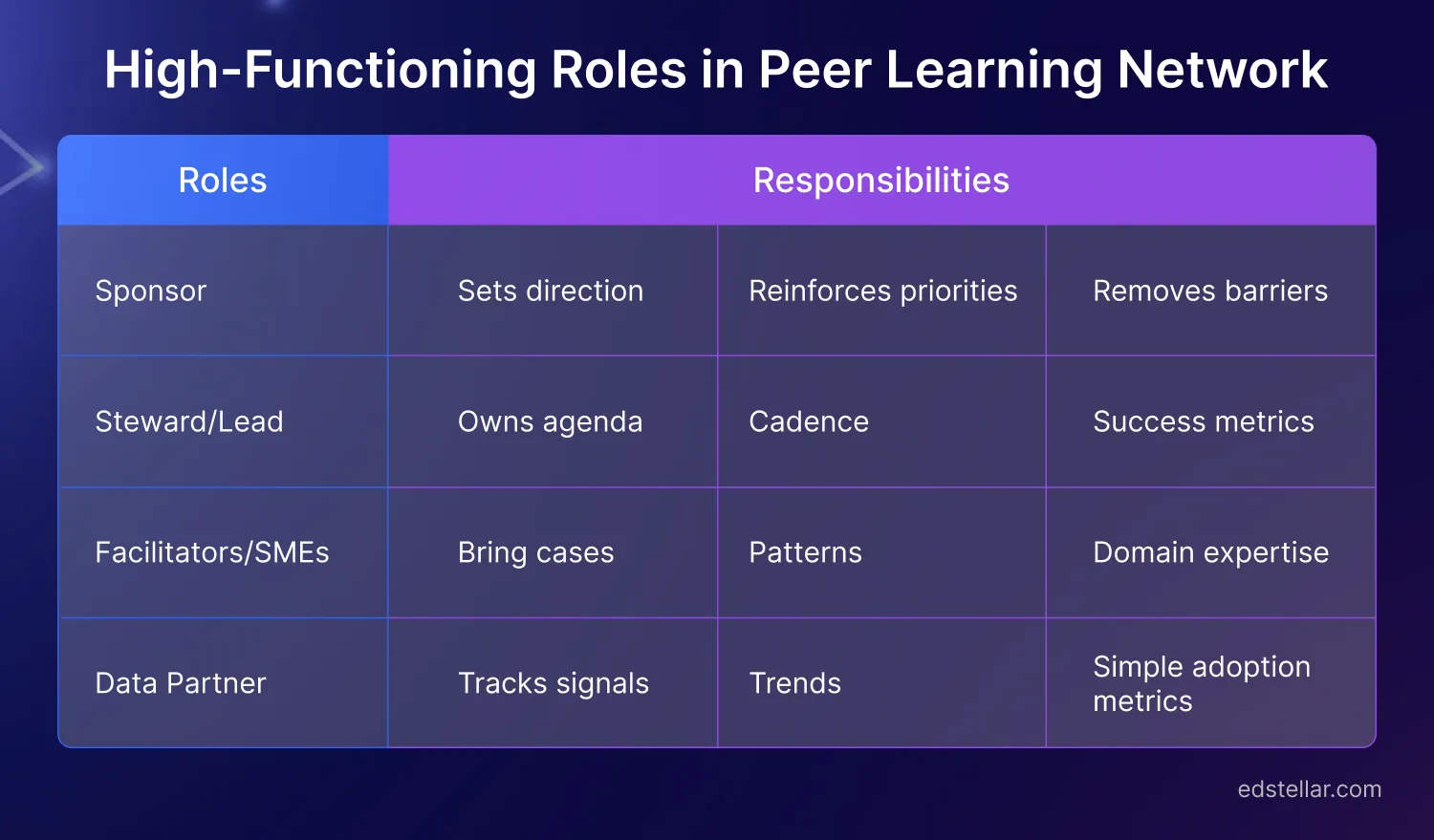

A high-functioning peer learning network needs only four roles:

- Sponsor: Sets direction, reinforces priorities, removes barriers

- Steward or Lead: Owns agenda, cadence, and success metrics

- Facilitators or SMEs: Bring cases, patterns, and domain expertise

- Data Partner: Tracks signals, trends, and simple adoption metrics

Governance protects momentum; it does not create it. Momentum still depends on the quality of facilitation, the relevance of cases, and the strength of sponsorship.

Role Misalignment Symptoms:

- Sessions become updates instead of problem-solving

- Facilitators arrive unprepared or rely on slide decks

- The steward becomes a bottleneck because others do not contribute

- The sponsor disappears after kickoff

Minimal RACI for Peer Learning:

- Sponsor = Accountable

- Steward = Responsible

- Facilitators = Consulted

- Data Partner = Informed

3. Design Peer Learning Sessions for Application

The fastest way to kill a peer learning network is to let sessions turn into presentation theaters. People listen, nod, and leave unchanged. If the session does not alter someone’s work the next day, it serves no purpose.

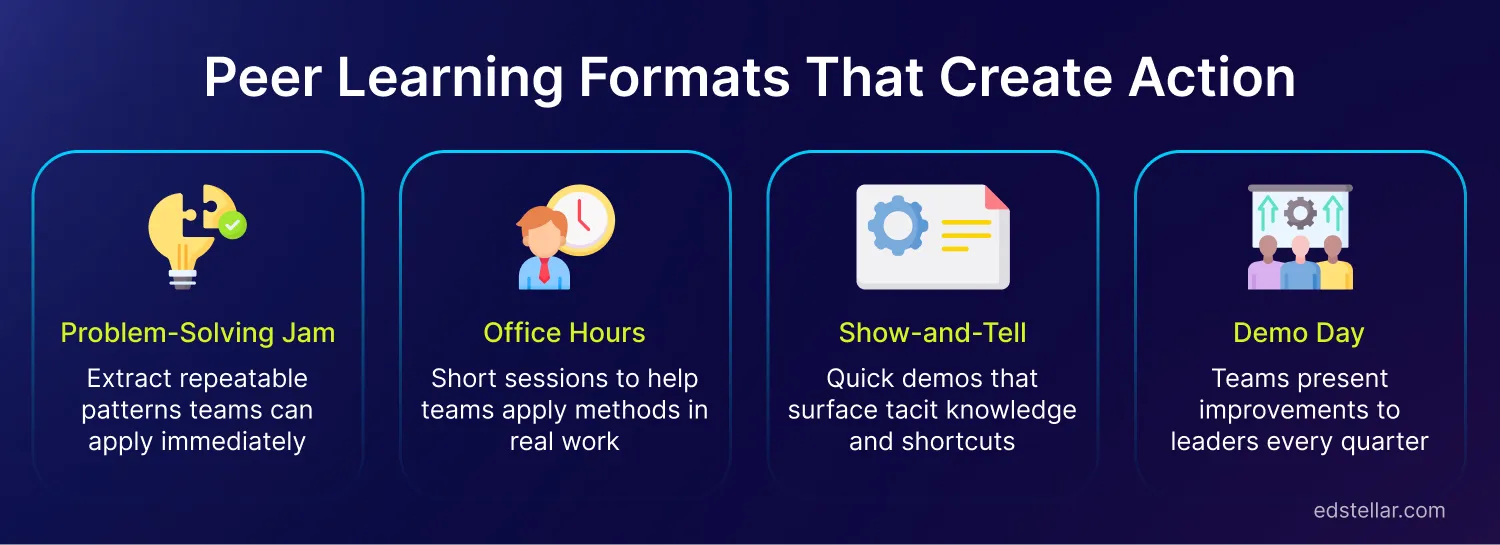

High-impact peer learning relies on formats that create action:

- Problem-Solving Jams: Break down real cases and extract a repeatable pattern

- Office Hours: Short sessions to unblock teams applying those patterns

- Show-and-Tell Demos: Before-and-after examples that surface tacit knowledge

- Demo Day: A quarterly forum where teams present to leaders

These formats work because they sit inside the flow of work, expose decision-making logic, and convert individual experience into shared capability.

Session Pitfalls to Avoid:

- Slides instead of cases

- Facilitators lecturing instead of guiding

- Members arriving without a problem to discuss

- Discussions drifting without capturing patterns

A Simple Facilitator Script:

- Present the case

- Break down what happened

- Extract the pattern

- Agree on where it applies next

- Capture it in the playbook

Used consistently, these formats and this script also:

- Accelerate collective problem-solving

- Create reusable patterns

- Strengthen peer networks

4. Run Peer Learning in 90-Day Sprints for Momentum and Discipline

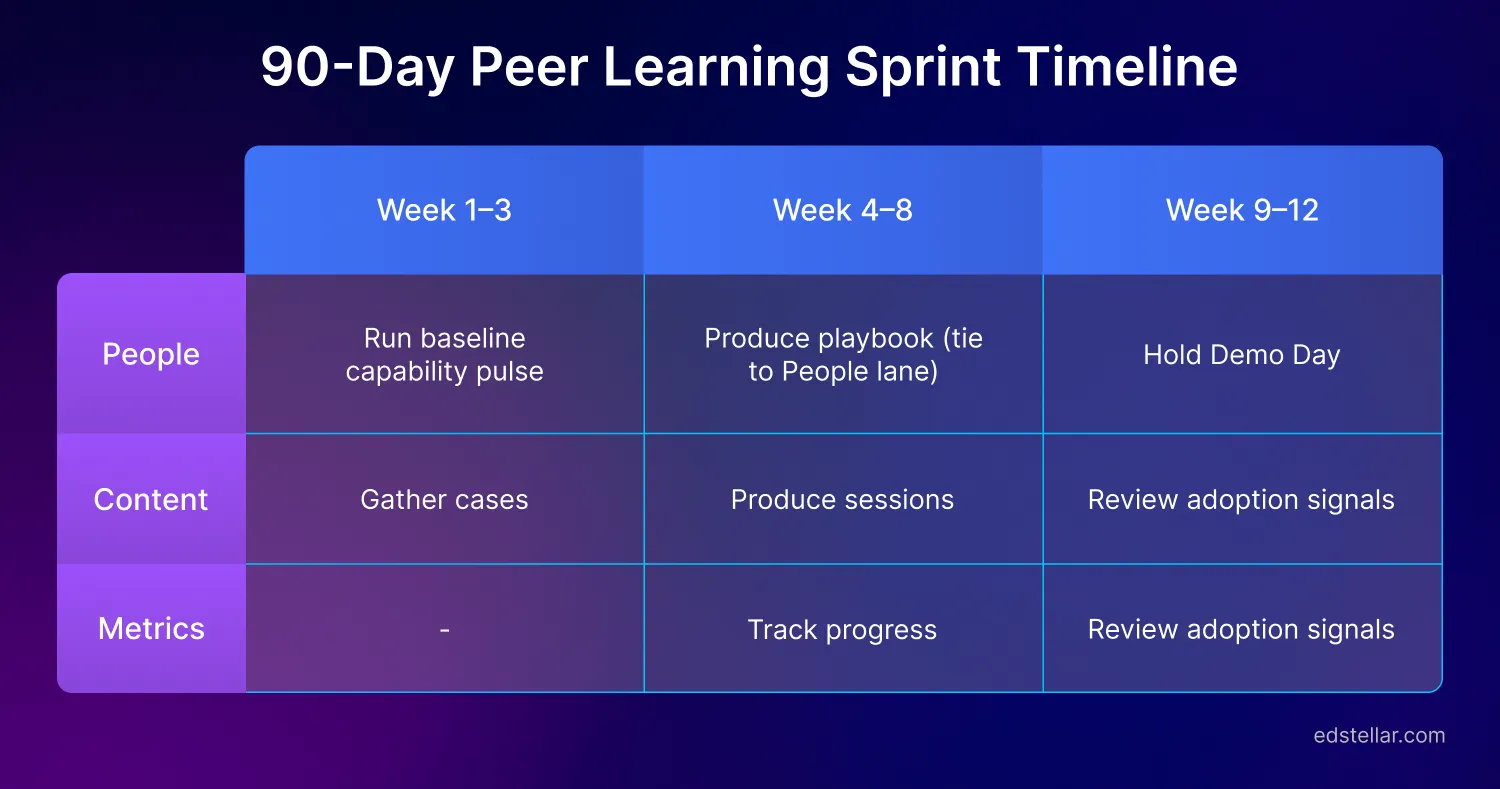

Peer learning collapses when the cadence is open-ended. A 90-day sprint cycle provides urgency, structure, and natural checkpoints that align with executive calendars and delivery cycles.

A simple rhythm works best:

Weeks 1 to 3:

- Run a baseline capability pulse

- Gather cases

- Refresh or finalize the charter

Weeks 4 to 8:

- Host two to three high-value sessions

- Produce version 0.1 of your playbook

Weeks 9 to 12:

- Hold Demo Day

- Review adoption signals

- Decide what to continue, pause, or redesign

- Lock the next quarter’s goals

Skipping any part of this rhythm creates drift. Skipping Demo Day is especially damaging, because it removes sponsor visibility and weakens the network’s legitimacy.

Why 90 days works:

- Executives think in quarterly cycles

- Teams can commit to short bursts of focus

- Learning loops are tight enough to show impact

- Patterns emerge quickly without overwhelming contributors

Peer Learning Launch Kit: Your Starter System for the First 90 Days

What prevents peer learning networks from collapsing is a simple operating system. Not a binder, not a theoretical model, but a practical, ready-to-use toolkit that removes guesswork and gives your network a clear path through the first 90 days while it finds its rhythm.

The Peer Learning Starter Kit (Excel) was created for this exact purpose. It provides the essential templates already laid out: the charter, the roles, the cadence, the case intake process, and the starter metrics.

It does not replace leadership or facilitation capability. It eliminates the 30 to 60 days most teams waste deciding how to start.

If you want to avoid chaos and move straight to impact, this is the easiest way to begin.

What’s Inside (Only the Essentials):

The kit is intentionally minimal. It gives you building blocks, not bureaucracy.

- Peer Learning Charter Template: A one-page anchor that defines purpose, roles, cadence, success measures, and boundaries.

- Roles and RACI Snapshot: Clear ownership that prevents contribution from collapsing onto one person.

- 90-Day Cycle Planner: A prebuilt rhythm your team can use immediately without designing anything from scratch.

- Case and Content Intake Sheet: A simple way to surface real work problems and convert them into structured learning sessions.

- Starter Metrics Tracker: A lightweight sheet to track early adoption signals and ensure the network builds momentum.

When to Use This Kit:

Use this kit when:

- Your team is ready to launch peer learning but does not know how to structure it

- You cannot afford to waste the first few weeks figuring out how to begin

- You need to show early progress to sponsors within the first quarter

- You want a simple, battle-tested operating system instead of experimenting with formats

- You want clarity before you invest additional time or political capital

If you start without structure, you will burn goodwill within weeks. This kit prevents that.

Before You Move On:

Launching a peer learning network is only the first stage. Sustaining it and proving its value is what determines whether it becomes a respected capability engine or fades into “another meeting.”

If you do not measure adoption, behavior change, or business outcomes, the network will lose sponsor support by month three.

This is why the next article is essential.

Next Article: How to Measure Peer Learning and Avoid the Failure Traps

If the Starter Kit is your launch system, the next guide is how you keep the network funded, respected, and tied to real business impact.

Conclusion

Peer learning is a simple idea at its core. The people who do the work come together, compare what they are seeing, make sense of emerging challenges, and turn shared insight into shared practice. What converts that simplicity into a strategic advantage is the system around it: a clear purpose, defined roles, sessions anchored in real work, and a 90-day rhythm that keeps progress moving forward.

You do not need multiple learning groups to prove the model. You need one mission-critical domain, one sponsor who cares about outcomes, and one disciplined cycle where conversations turn into patterns and patterns turn into measurable improvements. When that happens, you are no longer running a learning initiative. You are building a capability engine that helps your organization learn faster than the environment is changing.

The window for capability transformation is narrowing. Workflows are shifting monthly. AI is rewriting how decisions are made. Teams cannot wait for slower, traditional approaches to catch up. A well-designed peer learning network gives your organization a practical way to move experience, strengthen judgment, and improve performance in real time.

If you want to launch this with structure instead of guesswork, Edstellar can support you from design to execution. Our L&D Consulting Service helps organizations build peer-learning charters that align with business outcomes, establish sustainable operating rhythms, create applied playbooks, and set up measurement systems that prove impact.

Pick a capability that truly matters, launch a focused peer learning network, and let the first 90 days demonstrate what disciplined practitioner learning can accomplish.

In a world where complexity increases every week, peer learning is not a program. It is your competitive advantage.
